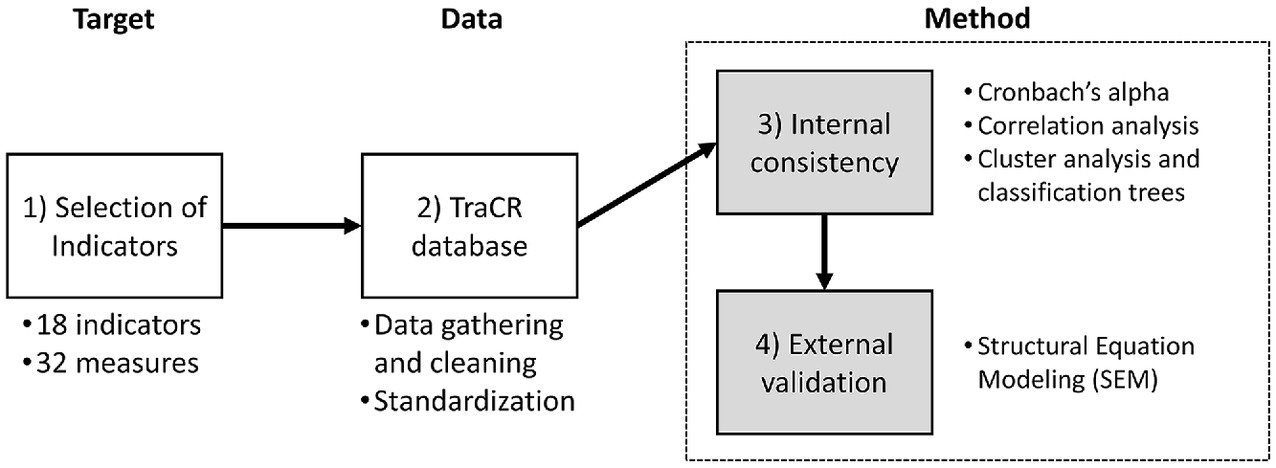

Previous frameworks have offered theoretical grounds for selecting indicators and identifying commonalities across indicators, and a handful of dependent variables have been tested in journal articles related to disaster impacts. However, the consistency among indicators, and between indicators and the dependent variables that represent the empirical aspects of community resilience, remains in question. The main contributions of this study are comparing several internal consistency test methods used in previous frameworks, proposing an indicator validation method to overcome the research gaps in the literature, and finally, identifying indicators with remaining consistency and validation issues.

Internal Consistency Assessments

The first phase of this study is internal consistency assessments to explore the reliability of indicator selections. The motivating questions are: (1) does internal consistency exist within the indicators chosen for this study, (2) does the selection of indicators change estimates of county characteristics, and (3) if so, which indicator drives the estimates? Importantly, this study explores the consistency of both indicators and measures, which is an extra step that is needed when more than one measure can be used for an indicator. Whereas prior works acknowledge this issue, it is rarely operationalized in the internal consistency phase. The extra analysis is needed because if measures for the same indicator are in fact measuring different constructs, then each measure could result in inconsistent or unreliable estimation of shared community characteristics.

To answer these questions, this study applies several test methods that complement each other: (1) Cronbach’s alpha analysis to check the overall consistency of all 18 indicators and consistency within each indicator for indicators with multiple measures, (2) correlation analysis to compare relationships between each pair of measures, (3) cluster analysis to identify expected differences between the estimations of community resilience, and (4) a classification tree to indicate the key drivers of cluster outcomes.

Cronbach’s alpha (Cronbach 1951) and correlation analysis are conventional testing methods of internal consistency (Peacock et al. 2010). The acceptable alpha values showing internal consistency range from 0.70 to more than 0.95 (Tavakol and Dennick 2011). However, offering an alpha is not sufficient to qualify the internal consistency of measures because a high alpha can also be achieved simply by increasing the number of items (Acock 2013). To mitigate this issue, correlation coefficients were used to compare the level of internal consistency for each measure. Instead of presenting a correlation table showing pairs of relationships, this study listed the number of correlation coefficients with an absolute value higher than 0.3 (weak relationship and higher) for each measure as a way to summarize their internal consistency regardless of the signs of the coefficients. Both analyses were based on 3,009 counties in the conterminous United States due to missing observations and listwise deletion (a 93.1% coverage based on the total of 3,230 counties).
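The two checks described above can be sketched in a few lines of dependency-free Python. This is a minimal illustration under stated assumptions, not the study's actual code: the function names (`listwise_delete`, `cronbach_alpha`, `count_strong_correlations`) and the toy data are hypothetical, and the standard alpha formula, k/(k − 1) × (1 − sum of item variances / variance of the summed score), is assumed.

```python
from math import sqrt
from statistics import mean, variance


def listwise_delete(rows):
    """Keep only counties (rows) with no missing value in any measure."""
    return [r for r in rows if all(v is not None for v in r)]


def cronbach_alpha(columns):
    """Cronbach's alpha for k measures, each a list of n county values."""
    k = len(columns)
    n = len(columns[0])
    # Summed score per county across the k measures.
    totals = [sum(col[i] for col in columns) for i in range(n)]
    item_var = sum(variance(col) for col in columns)
    return (k / (k - 1)) * (1 - item_var / variance(totals))


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def count_strong_correlations(columns, threshold=0.3):
    """For each measure, count the other measures with |r| above threshold."""
    return [
        sum(1 for j, y in enumerate(columns)
            if j != i and abs(pearson(x, y)) > threshold)
        for i, x in enumerate(columns)
    ]


# Hypothetical toy data: one row per county, one column per measure.
rows = [
    (1.0, 2.0, 1.0),
    (2.0, 4.0, -1.0),
    (None, 5.0, 0.0),  # removed by listwise deletion
    (3.0, 6.0, -1.0),
    (4.0, 8.0, 1.0),
]
complete = listwise_delete(rows)
columns = [list(col) for col in zip(*complete)]
alpha = cronbach_alpha(columns[:2])          # alpha of the first two measures
counts = count_strong_correlations(columns)  # one count per measure
```

Counting on the absolute value of the coefficient mirrors the choice in the text to summarize consistency regardless of sign.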

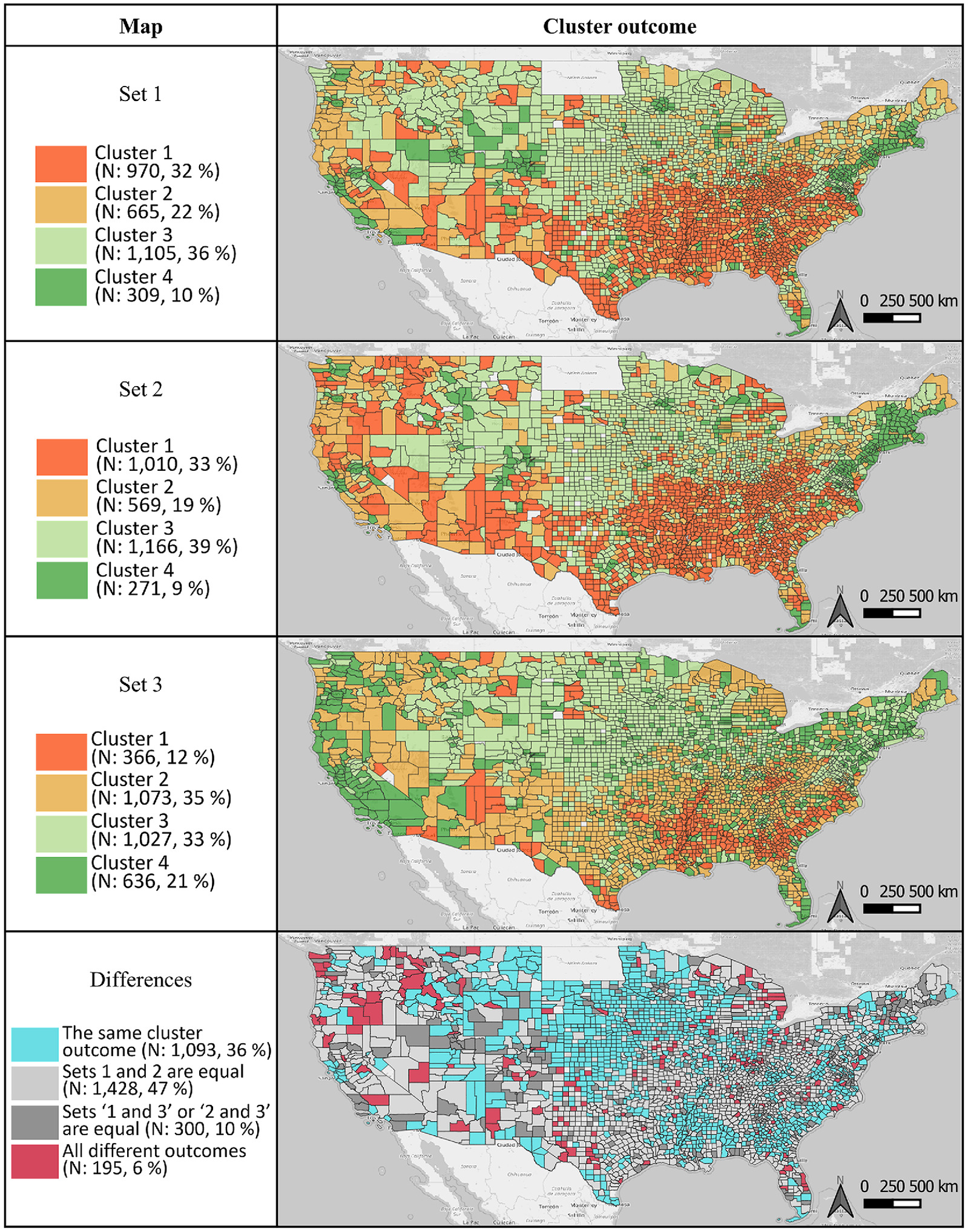

The next question is whether the selection of measures causes inconsistency in community resilience estimates. Cluster outcomes are compared to identify patterns of community resilience characterization with different arrays of measures. If cluster outcomes are sensitive to measure choices, then theoretical validation efforts on indicator selections may not be enough to guarantee coherent estimations of community resilience. Table 2 lists the selected arrays of measures for three sets. These sets represent a hypothesized selection of measures when estimating community resilience. Sets 1 and 2 consist of one measure for each indicator. Specifically, set 1 contains measures with the highest internal consistency based on the correlation coefficients. Similarly, set 2 contains measures with the second-highest internal consistency. Set 3 contains seven test measures (under the indicator Test measures in Table 1) in addition to eight commonly used measures that are used for both sets 1 and 2 (highlighted text in bold). In this way, the estimated cluster outcomes represent the results by the measures within the structure of 18 commonly used indicators (sets 1 and 2), and by the not commonly used measures (set 3). Due to the missing observations in each indicator, 3,049, 3,016, and 3,103 counties in the conterminous United States were included in sets 1, 2, and 3, respectively (94.4%, 93.4%, and 96.1% coverages based on the total of 3,230 counties).

A two-stage clustering procedure is conducted: first, Ward’s hierarchical method to find group centroids (Ward 1963), and second, using these centroids as the initial seed points, a k-means cluster analysis (Lloyd 1982) estimates the formation of the clusters. The cluster outcomes can represent several county groups of similar characteristics by minimizing the distances from the nearest cluster center. This procedure is advocated in many studies because it tends to produce clusters with roughly the same number of observations with improved globally optimal formations (Rovan and Sambt 2003). For each cluster analysis, four groups of clusters were estimated because the scree plots of the within sum of squares (WSS) and proportional reduction of error (PRE) coefficients indicated four to six groups, and cluster outcomes with five or more groups generated underused groups.
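The two-stage procedure can be illustrated with a small, dependency-free Python sketch. It is an assumption-laden toy, not the study's implementation: the function names and the twelve two-dimensional points are hypothetical, the Ward stage is a naive search over all cluster pairs (adequate only for small inputs), and the WSS values it collects are the quantity a scree plot would display.

```python
def centroid(points):
    """Coordinate-wise mean of a list of points."""
    n = len(points)
    return [sum(p[d] for p in points) / n for d in range(len(points[0]))]


def sq_dist(a, b):
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def ward_centroids(points, k):
    """Stage 1: merge clusters by Ward's criterion until k remain."""
    clusters = [[p] for p in points]
    while len(clusters) > k:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                a, b = clusters[i], clusters[j]
                # Ward's cost: increase in within-cluster sum of squares.
                cost = (len(a) * len(b) / (len(a) + len(b))
                        * sq_dist(centroid(a), centroid(b)))
                if best is None or cost < best[0]:
                    best = (cost, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]  # j > i, so index i is unaffected
    return [centroid(c) for c in clusters]


def kmeans(points, seeds, max_iter=100):
    """Stage 2: Lloyd's k-means seeded with the Ward centroids."""
    centers = [list(s) for s in seeds]
    labels = None
    for _ in range(max_iter):
        new_labels = [
            min(range(len(centers)), key=lambda c: sq_dist(p, centers[c]))
            for p in points
        ]
        if new_labels == labels:  # assignments stable: converged
            break
        labels = new_labels
        for c in range(len(centers)):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old center if a cluster empties
                centers[c] = centroid(members)
    return labels, centers


def within_ss(points, labels, centers):
    """Within-cluster sum of squares (WSS)."""
    return sum(sq_dist(p, centers[lab]) for p, lab in zip(points, labels))


# Hypothetical standardized county scores: four well-separated groups.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10),
          (0, 10), (0, 11), (1, 10), (10, 0), (10, 1), (11, 0)]

# WSS for each candidate number of clusters, as plotted in a scree plot.
wss_by_k = {}
for k in range(2, 7):
    k_labels, k_centers = kmeans(points, ward_centroids(points, k))
    wss_by_k[k] = within_ss(points, k_labels, k_centers)

labels, centers = kmeans(points, ward_centroids(points, 4))
```

Seeding k-means with the Ward centroids removes the dependence on random starting points, which is the practical reason the combined procedure is usually preferred over k-means alone.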

A classification tree is a tree-structured predictive model of binary decisions (Breiman et al. 1984). Classification tree analysis identifies measures that are most strongly associated with the outcome groups by minimizing the variability within each group. The classification tree algorithm is applied to the cluster outcomes to identify key measures dividing cluster groups by utilizing the Stata and R modules (Gareth et al. 2013; Cerulli 2019). Three classification trees were derived by each set of indicators based on the optimal pruning method after testing specified sizes and mean square errors. The measures selected for the first and second nodes play a decisive role in dividing the cluster groups. For the external validation assessment, the selected measures in sets 1 and 2 were employed to generate the latent community resilience variable grounded in the perspective of commonly used indicators.
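To make the role of the first node concrete, the sketch below searches for the single binary split that best separates cluster labels. It is a simplified illustration, not the Stata or R routines used in the study: it assumes Gini impurity as the within-group variability criterion, evaluates only the root split (no recursion or pruning), and uses hypothetical measure names and values.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of cluster labels (0.0 means a pure group)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels, names):
    """Return (measure name, threshold, weighted impurity) of the best split."""
    best = None
    n = len(rows)
    for f, name in enumerate(names):
        values = sorted({r[f] for r in rows})
        # Candidate thresholds: midpoints between consecutive observed values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [lab for r, lab in zip(rows, labels) if r[f] <= t]
            right = [lab for r, lab in zip(rows, labels) if r[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[2]:
                best = (name, t, score)
    return best


# Hypothetical counties: (household poverty %, median rent in dollars).
names = ("household_poverty", "median_rent")
rows = [(10, 700), (12, 900), (11, 650), (25, 880), (27, 720), (30, 910)]
cluster = [0, 0, 0, 1, 1, 1]  # cluster membership from the previous step

root = best_split(rows, cluster, names)
```

In this toy data the poverty measure separates the two clusters perfectly while rent does not, so poverty would occupy the first node. A full tree would repeat the search within each branch and then prune.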

External Validation Using Structural Equation Modeling

The second phase of this study is external validation. Validity assessments need to be tested by examining how well the indicators and measures estimate observed community resilience outcomes as dependent variables. Structural equation modeling (SEM) can combine indicators and measures as a hypothetical construct (a latent variable) and test causal assumptions and interrelationships through a system of equations (Ullman and Bentler 2012).

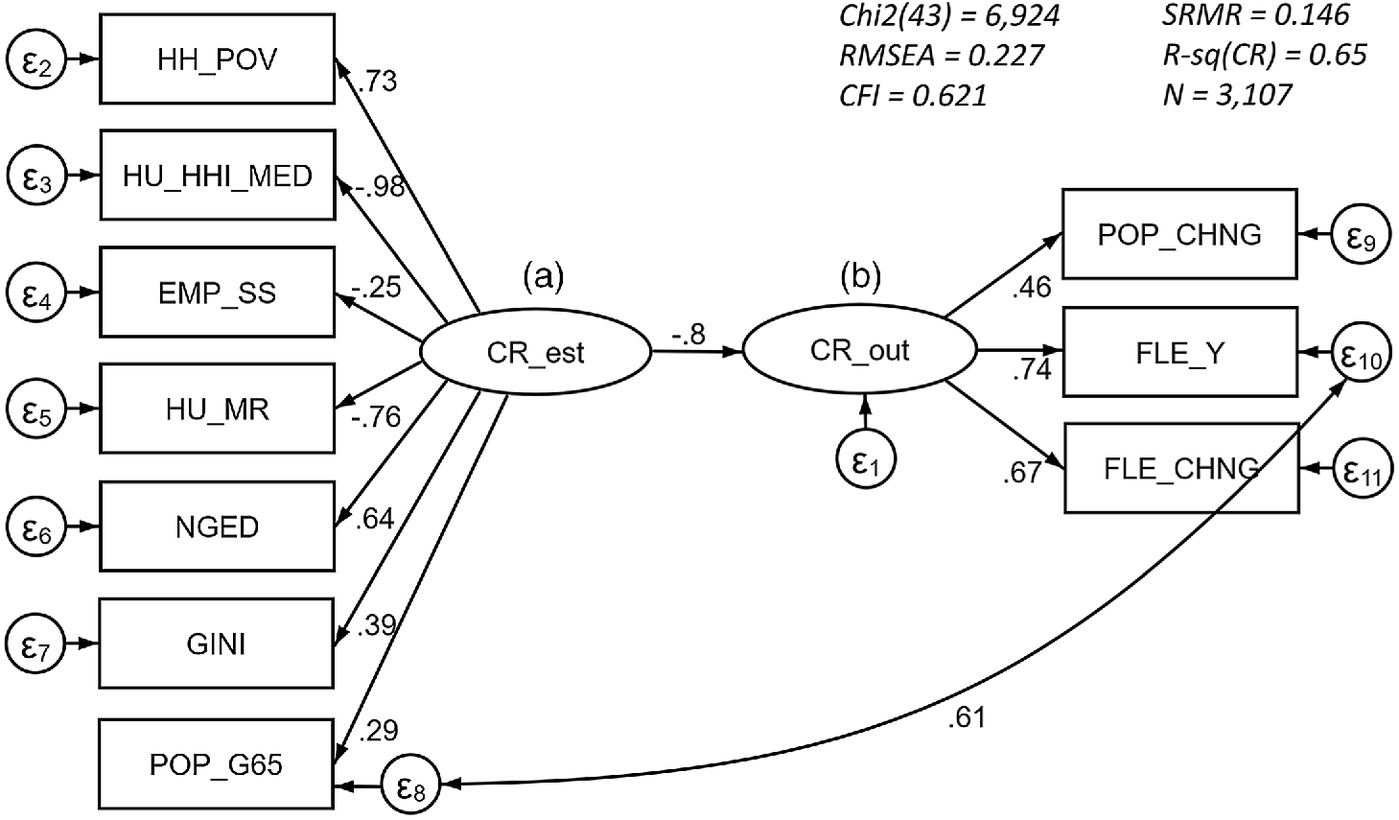

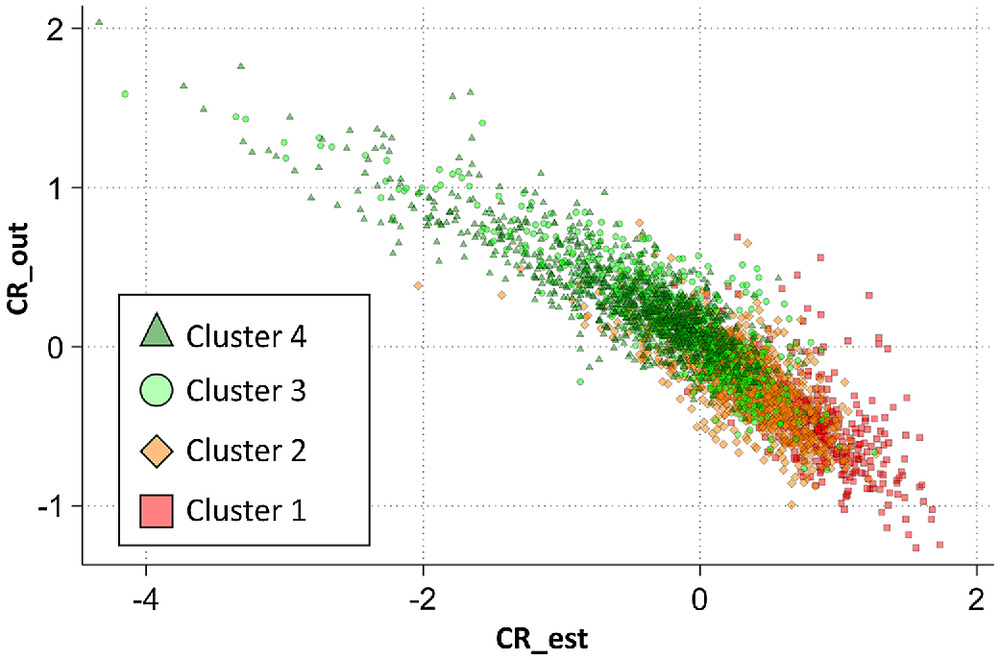

The overarching goal of this study is to develop a simplified example of a validation assessment method with respect to commonly used indicators in the form of multiple observed covariations. Commonly used indicators and measures can be both a cause and effect of community resilience. However, for an SEM solution, including complex interrelationships between the indicators and the dependent variables will dilute the validation analysis. Furthermore, whereas a crucial aspect of resilience is the ability of systems to mitigate and recover from impacts of stressors, there is a lack of empirical evidence and systematic measures suggesting causal mechanisms between indicators and longitudinal behaviors associated with community resilience. Therefore, this study employs a straightforward modeling design with: (1) one latent variable representing an estimated community resilience by a construct of commonly used indicators, and (2) another latent variable representing an outcome of community resilience by a construct of proxy dependent variables. This analysis is aimed at assessing construct and predictive validity for test sets of community resilience indicators and measures. Construct validity is assessed by examining whether and how each indicator is related to the latent variable. Predictive validity is assessed by the equation-level goodness-of-fit measure, r-squared, to illustrate how much variance this model can explain between two latent variables. Fig. 3 in the results section shows the structure of these latent variables and related measurements.
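One conventional way to write this two-latent-variable design is given below. The notation is an assumption introduced for illustration and does not appear in the study: $\xi$ denotes latent variable (a), estimated community resilience, measured by $p$ indicator measures $x_i$; $\eta$ denotes latent variable (b), the outcome of community resilience, measured by $q$ proxy dependent variables $y_j$; $\lambda$ terms are loadings; and $\delta_i$, $\varepsilon_j$, and $\zeta$ are error terms. Intercepts are omitted on the assumption that all observed variables are standardized.

$$
\begin{aligned}
x_i &= \lambda^{x}_{i}\,\xi + \delta_i, && i = 1,\dots,p \\
y_j &= \lambda^{y}_{j}\,\eta + \varepsilon_j, && j = 1,\dots,q \\
\eta &= \beta\,\xi + \zeta \\
R^2_{\eta} &= 1 - \frac{\operatorname{Var}(\zeta)}{\operatorname{Var}(\eta)}
\end{aligned}
$$

Under this reading, construct validity concerns the sign, size, and significance of each loading $\lambda^{x}_{i}$ and $\lambda^{y}_{j}$, while predictive validity concerns $R^2_{\eta}$, the share of variance in the outcome latent variable explained by the structural equation.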

The SEM utilizes the indicators highlighted by the classification tree analysis to construct a latent independent variable. Seven indicators were included in the classification trees by sets 1 and 2 (poverty, income, regional economic vulnerability, housing cost, education, inequality, and age-based susceptibility); therefore, the seven corresponding measures were selected for the latent variable (a), estimated community resilience (household poverty, median household income, service sector employment, median rent, no high school diploma/GED, Gini index, and population 65 years and older). The number of observations is 3,107 counties in the conterminous United States (a 96.2% coverage based on the total of 3,230 counties).

On the other side, a set of proxy dependent variables can serve as the latent variable (b), outcomes of community resilience. Previous validation studies have utilized a multivariate research design estimating a single disaster-oriented dependent variable at a time by employing several measures of community resilience that are related to acute stressors following disaster events [e.g., property damage (Burton 2010), estimated losses (Schmidtlein et al. 2011), disaster-related death rates (Peacock et al. 2010), and food and shelter needs (Crowley 2021)]. In these previous studies, the community resilience dependent variables primarily focused on the robustness aspects of community resilience for disaster-impacted communities after major disaster events. Accordingly, these dependent variables were more suitable for a relatively small geographic area, such as an adjacent group of communities sharing local contexts with comparable levels and types of disaster records.

This study aims to develop validated indicators and measures to account for community-scale resilience outcomes that can include impacts of both acute and chronic stressors over time. Using a disaster-oriented dependent variable for a national-level study can mislead the estimation of community resilience due to the geographically unbalanced frequency and intensity of major disaster events. For example, the flood exposure measures have skewed distributions due to the many counties with zero or very low aggregated values from 2000 to 2016.

The assessment methodology is intended to be applicable to communities during blue skies and after hazard events. Several proxy measures can be considered as examples of comprehensive dependent variables. Variables related to overall county characteristics, such as disease-related morbidity (Dillard et al. 2013), life expectancy (Gall 2007), and changes in population and occupancy (Myers et al. 2008; Finch et al. 2010), offer an extended view of community resilience that can address both acute and chronic stressors within each county’s longitudinal trend. These types of variables are suited for illustrating levels of community resilience regardless of the types and intensities of previous or expected disaster events or even without considering disaster events.

This study focuses on population and life expectancy as quantity and quality measures of community resilience outcomes. The first proxy measure is population change. Many cities in the United States have faced urban depopulation since the 1950s as a consequence of deindustrialization and subsequent job loss and outmigration (Hollander et al. 2009). In general, for a local municipality, loss of population has been reported as a signal or a causal factor of an ongoing structural crisis rooted in economic transformations (Wiechmann and Pallagst 2012). Myers et al. (2008) and Finch et al. (2010) have utilized changes in population and housing vacancy/occupancy as an outcome of social vulnerability after disaster events. Regarding resource allocation or prioritization for community planning and development, population is also a criterion for distributing federal and state-level entitlement programs competitively. For example, the Community Development Block Grant (CDBG) uses two formulas, including the population and population growth rate variables for funding allocations (Jaroscak 2021), and the distribution of the Surface Transportation Block Grant Program (STBG) is based on the relative share of local municipalities’ population (Kalla 2022).

The second and third proxy measures are female life expectancy (FLE) and change in FLE. Before the 1950s, reductions in the death rate at young ages prompted the rise in life expectancy. On the other hand, after the 1950s, most of the gain in life expectancy was due to improvements in mortality after age 65 (Oeppen and Vaupel 2002). The gain is related to advances in many interrelated social systems, such as income, education, crime, diet and nutrition, access to health care, hygiene, and medicine (Oeppen and Vaupel 2002; Riley 2001). Accordingly, life expectancy can offer information about the well-being of a county in the United States (Kulkarni et al. 2011; Correia et al. 2013; Dwyer-Lindgren et al. 2017), describing the geographic variation of health outcomes related to overall socioeconomic and race-ethnicity characteristics. In the fields of health science and sociology, life expectancy has served as one of the fundamental topics of interest for understanding biological and, especially, social determinants, which are principal sources shaping the diverging health needs rooted in social inequality (Gutin and Hummer 2021). In terms of community resilience, Gall (2007) tested female and male life expectancies that account for health and quality of life to assess social vulnerability indices.

The latent dependent variable (b) consists of percent changes in population, female life expectancy, and percent changes in female life expectancy from 2000 to 2016 (US Census Bureau 2016). In this period, population growth amounts to 6.3% per county on average. The average female life expectancy is 79.7 years, and it has increased by 1.4% in the same period.

Using the SEM, a more comprehensive assessment of indicators can be conducted. Unlike multivariate regression models in the previous literature, the SEM allows for testing hypothesized patterns between latent variables, offering an option to control multicollinearity, and addressing differential importance on the aspects of the indicators and dependent variables. In addition, the latent structures mitigate the imperfect nature of each measure by taking into account measurement errors. The SEM utilized an intercept-suppressed option for each measure to reflect the zero mean values of the z-score standardized variables.
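The link between z-score standardization and the intercept-suppressed option can be seen in a small sketch. This is a bivariate regression analogue rather than an SEM, and everything in it is an assumption for illustration: the function names, the use of the sample standard deviation, and the toy values are hypothetical. It shows that once both variables are standardized, the fitted intercept is zero, so suppressing it removes a parameter without changing the fit.

```python
from statistics import mean, stdev


def zscore(values):
    """Standardize to mean 0 and (sample) standard deviation 1."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def ols(x, y):
    """Slope and intercept of a simple least-squares regression of y on x."""
    mx, my = mean(x), mean(y)
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return slope, my - slope * mx


# Hypothetical county values: poverty rate (%) and population change (%).
poverty = [8.0, 12.5, 15.0, 21.0, 9.5, 18.0]
pop_change = [9.1, 4.0, 2.5, -3.0, 7.2, 0.4]

zx, zy = zscore(poverty), zscore(pop_change)
slope, intercept = ols(zx, zy)  # intercept is zero up to rounding error
```

With standardized variables, the slope of this bivariate regression also equals the Pearson correlation, which is why standardized coefficients are convenient for comparing the relative importance of measures.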