Transfer-Learning and Texture Features for Recognition of the Conditions of Construction Materials with Small Data Sets

Publication: Journal of Computing in Civil Engineering

Volume 38, Issue 1

Abstract

Construction materials undergo appearance and textural changes during the construction process. Accurate recognition of these changes is critical for understanding construction status; however, recognition of detailed material conditions has not been sufficiently explored. The primary challenge in recognizing detailed material conditions is the limited availability of labeled training data. To address this challenge, this study proposes a novel deep learning model that leverages transfer learning, using the pretrained Inception V3 network to transfer knowledge to a limited labeled data set from the construction context. This enables the model to learn meaningful representations from limited training data, enhancing its ability to classify material conditions accurately. In addition, gray-level co-occurrence matrix (GLCM)–based texture features are extracted from the images to capture appearance and textural changes in construction materials; these are concatenated with the transferred convolutional neural network (CNN) features to create a more comprehensive representation of material conditions. The proposed model achieved overall classification accuracies of 95% and 71% with limited (208 images) and very small (70 images) data sets, respectively. It outperformed several alternative architectures: CNN models trained on limited data with and without augmentation, a CNN model combining data augmentation and transfer learning, and separate models that use local binary pattern (LBP) or GLCM texture features with super learners trained on augmented limited data. The findings suggest that combining transfer learning with GLCM-based texture features is effective for recognizing the conditions of construction materials, even with limited labeled training data, which can contribute to improved construction management and monitoring.

Practical Applications

The proposed model, which combines a CNN architecture with GLCM texture features, has several practical applications in the construction industry. The primary applications are quality inspection and progress monitoring. By analyzing images of material conditions on elements such as concrete, plaster, or masonry walls, the model can automatically detect defects or inconsistencies. This enables construction practitioners to ensure the quality of their structures, detect issues early, and make informed decisions about maintenance and repair. The model can also be used to monitor project progress by analyzing images to track the status and completion of different building components and comparing them with the material conditions expected at a given time in the schedule to estimate delays or cost overruns. Moreover, because the model does not depend on a large training data set, it enables construction managers to develop project-specific data sets and automate material condition detection and monitoring.

Introduction

Images play an essential role in digitizing the construction process. However, automatically making sense of collected images is a problem that researchers have addressed through various solutions over the past few decades. The computer vision and machine learning research domains have developed multiple practical approaches to classify and detect specific objects in image data sets. In the construction research domain, these methods have been explored to automate data collection, processing, and interpretation (Baduge et al. 2022), including for improving safety (Fang et al. 2020a), monitoring construction projects (Pal and Hsieh 2021), reducing construction waste (Davis et al. 2021), and optimizing cost and project duration (Mahmoodzadeh et al. 2022).

In the construction industry, image classification and construction element segmentation have been popular research subjects (Akinosho et al. 2020; Fang et al. 2020b; Khallaf and Khallaf 2021; Mostafa and Hegazy 2021). These efforts contributed to effective methods for understanding the construction environment in terms of general objects such as columns, beams, openings, HVAC elements, workers, and machinery. Detection and recognition on construction sites can go further, to materials such as concrete, bricks, dirt, timber, and rocks. However, knowing that elements exist does not capture the complete state of construction, primarily because most elements on a construction site undergo textural changes as the work progresses. For instance, wall construction could start with laying bricks, followed by applying wire mesh and multiple plastering layers, each of which has a distinct texture. Concrete elements [Fig. 1(a)] could be chiseled [Fig. 1(b)] to receive mortar plastering layers [Fig. 1(c)], and chiseled surfaces have textural properties distinct from flat or plastered surfaces. Recognition of these textural variations is not sufficiently explored in the construction industry.

Recognizing textural variations is important in the construction industry for two main reasons. First, changing an element from one material condition to another requires the effort of a crew or a worker. For instance, chiseling a set of concrete columns could take a worker several hours, and applying a single coat of plaster could require several worker-hours and resources. Accumulated delays and material waste in these specific subtasks result in budget overruns and delays to the whole project, so tracking material conditions creates the potential to manage activities at the subtask level. Second, understanding the textural variations of construction elements enables early detection of quality deviations and defects.

Previous research has also achieved promising results in automatically detecting edges, drywall patches, cracks, electric sockets, and similar small, specific cases (Khallaf and Khallaf 2021; Mahmoodzadeh et al. 2022). However, these cases have distinct patterns and features, and most of these methods depend on large labeled data sets to define patterns with high confidence. In recent years, the increasing affordability of reality capture technologies has largely resolved the problem of collecting raw image data. However, labeling a large set of images whose contents require special skills to identify specific markings and nonuniform patterns remains difficult and very time-consuming.

Adequate image data representing all possible textural variations of construction materials are not widely available for training classification and detection algorithms (Mengiste et al. 2022). Therefore, this research proposes an image recognition algorithm that performs grain-level texture classification on limited data sets by fusing transfer-learning and texture-based features. To that end, this research investigates whether, instead of adding more synthetic (imitated) features to a small data set, removing unnecessary features from the given images increases the ability of classifiers to learn representative patterns of specific material conditions. Accordingly, in this paper, images were preprocessed to reduce extraneous features and retain the defining textural information using texture extraction and transfer-learning methods.

Motivated by the limitations in existing studies, this paper proposes a deep learning–based methodology to handle the limited data situations. The main contributions of the current study are summarized as follows:

•

A state-of-the-art model that combines high-level features obtained through transfer learning with texture-based features is proposed to classify construction material conditions. Adding the concatenated features improves the interpretability of the model and enhances its overall performance.

•

Implementation of a method that can detect the conditions of construction materials even with limited data.

To test the proposed methodology, a case study consisting of different material conditions was used to evaluate the performance of different models. The material conditions are not meant to be associated with a particular construction task.

The rest of this paper is organized as follows. “Literature Review” presents the state of the art in image-based material classification, focusing on cases of data limitation and texture understanding. “Methodology” covers the development of the alternative classification algorithms and the comparison criteria. “Case Study” shows the results obtained from experiments using the proposed algorithms on construction site images. “Results and Discussion” discusses the results and findings. “Limitations” highlights concepts that could improve the current work and proposes future considerations. “Conclusion and Outlook” presents the conclusions.

Literature Review

Image data can be sourced directly from the construction site or scraped from the internet. Regardless of the source, labeling the specifics of the material surface is a potential challenge. Wang et al. (2019) developed an extensive image database through online crowdsourcing, in which crowds worldwide joined the image labeling task. This process effectively produces an extensive image database labeled with coarse building elements, and databases such as that of Yu et al. (2015) were developed following a similar process. However, identifying material texture becomes difficult for an untrained crowd when detailed construction material conditions are involved.

Automating the labeling process based on predefined information is one way of tackling the labeling challenge. For instance, Braun and Borrmann (2019) proposed the automatic generation of labeled images from point cloud data. In their approach, preset information extracted from the building information model (BIM) was aligned with a point cloud, and inverse photogrammetry was performed to generate images with labeled elements. The labels their method provides are only as detailed as the level of development (LOD) of the BIM. In most cases, the detailed condition of a construction material or element is not modeled in four-dimensional BIM, even at a high LOD, because BIM models usually adopt generic computer-generated textures for the graphic representation of elements. This makes the approach difficult to apply for automatically labeling images according to material conditions.

Data Augmentation

Data augmentation artificially inflates (i.e., increases) the training data set to address overfitting. Data warping and oversampling are the two most frequently used approaches. Data warping transforms the available images into additional images with the same label by adjusting their color and geometry. Oversampling, by contrast, synthesizes new instances, for example by mixing multiple images of the same label (Shorten and Khoshgoftaar 2019). Frequently used data augmentation techniques are summarized in Table 1.

| Data augmentation technique | Sources | Disadvantages |
|---|---|---|
| Geometric transformations: rotation, zoom, shear, translation, flipping | López de la Rosa et al. (2022), Ogunseiju et al. (2021), and Shorten and Khoshgoftaar (2019) | It can be misleading for contents whose labels change when rotated, scaled, or translated, for instance, in text recognition. |
| Color space transformation: applying color filters to modify the RGB values | Shorten and Khoshgoftaar (2019) | It can be misleading when objects are distinguished by their RGB values. |
| Noise injection: generating data by adding noise through various methods, such as Gaussian noise | Moreno-Barea et al. (2018) | It can be computationally expensive when applied to a relatively noisy data set. |
| Mixing images: pairing multiple data points to produce synthetic data | Inoue (2018), Liang et al. (2018), and Summers and Dinneen (2018) | Despite multiple experiments in the literature, why it increases performance is not fully understood. |
| Brightness: increasing or decreasing the brightness of a given image | Kandel et al. (2022) and Yang et al. (2022) | Although some studies claim that brightness-based augmentation eliminates overfitting (Yang et al. 2022), others claim that it generally degrades performance (Kandel et al. 2022). |
| Deep learning based: using deep learning to mimic real data and generate a synthetic set | Nalepa et al. (2019), Shorten and Khoshgoftaar (2019), and Wang et al. (2017) | Achieving convergence can be challenging for some types of images. Moreover, it can produce similar images (the mode collapse problem), reducing the ability of augmentation to improve generalization. |
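Several techniques in Table 1 reduce to simple array operations. As a hedged illustration, not the augmentation pipeline used in this study, the NumPy sketch below applies a flip, Gaussian noise injection, image mixing, and a brightness change to images scaled to [0, 1]. The function names and parameter values (noise standard deviation 0.05, mixing weight 0.5, brightness factor 1.3) are hypothetical choices for illustration; the mixing function averages two images in the spirit of SamplePairing (Inoue 2018).

```python
import numpy as np

def inject_gaussian_noise(image, sigma, rng):
    """Noise injection: add zero-mean Gaussian noise and clip back to [0, 1]."""
    return np.clip(image + rng.normal(0.0, sigma, size=image.shape), 0.0, 1.0)

def mix_images(image_a, image_b, weight=0.5):
    """Image mixing: pixel-wise weighted average of two same-shape images.
    weight=0.5 gives a plain average, as in SamplePairing-style mixing."""
    if image_a.shape != image_b.shape:
        raise ValueError("images must have the same shape")
    return weight * image_a + (1.0 - weight) * image_b

def change_brightness(image, factor):
    """Brightness: multiply intensities by `factor` and clip to [0, 1]."""
    return np.clip(image * factor, 0.0, 1.0)

rng = np.random.default_rng(seed=0)
a = rng.random((64, 64, 3))  # stand-ins for two photos scaled to [0, 1]
b = rng.random((64, 64, 3))
augmented = [
    np.flip(a, axis=1),                   # geometric transformation: horizontal flip
    inject_gaussian_noise(a, 0.05, rng),  # noise injection
    mix_images(a, b),                     # mixing images
    change_brightness(a, 1.3),            # brightness
]
```

Each call returns a new array with the same shape as its input, so the outputs can be appended to the training set with the label of the source image.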

Data augmentation has been used in construction research to increase the performance of deep learning algorithms. Ottoni et al. (2022) developed deep learning and regression models for recognizing building facades from 390 source images. Applying rotation, horizontal and vertical flipping, shearing, height and width shifting, and zooming to the source images increased the data set to around 32,000 training images. In their study, data augmentation substantially improved the performance of all the models. Similarly, Baek et al. (2019) performed generative adversarial network–based data augmentation to improve the performance of a binary classifier for various construction equipment. However, building facades and construction equipment have basic shapes and easily definable forms: augmented images keep the basic form while the remaining semantic information is manipulated to inflate the data set. In contrast, construction materials can bear completely random patterns whose form may not uniquely designate a specific label.

Transfer Learning

Transfer learning is one of the go-to approaches for training a machine learning model on a limited data set (Ng et al. 2015; Zheng et al. 2020). In general, transfer learning retrains a model that was trained for one subject domain so that it serves another subject domain (Bozinovski 2020). The construction industry has explored transfer learning in response to the limited availability of training data (i.e., small data sets) (Yang et al. 2020). Zheng et al. (2020) proposed a technique based on transfer learning and virtual prototyping to overcome the unavailability of real-life image data for training a deep learning model to monitor the progress of modular construction. In their study, they developed a Mask R-CNN (mask region-based convolutional neural network) architecture from synthetic image data sets. Transfer learning was applied to avoid overfitting and to adapt the model developed on synthetic data to real-life conditions.

Transfer learning is also frequently used to transfer knowledge from deep neural networks (DNNs). For example, Yang et al. (2020) proposed a crack detection method for civil infrastructure using deep convolutional neural networks (DCNNs) such as Visual Geometry Group 16 (VGG16), transferring the knowledge to the subject domain by adding fully connected layers and parameters. Similarly, Dais et al. (2021) conducted a comprehensive study of the performance of deep learning models with transfer learning for masonry crack classification and segmentation. Their study was conducted at the pixel and patch levels using various pretrained networks, such as VGG, Inception V3, MobileNet, ResNet, and DenseNet, and revealed that transfer learning effectively boosted the performance of these networks.

The choice of deep learning network architecture plays a critical role in transfer-learning performance. In image classification, convolutional neural network (CNN) architectures such as ResNet, VGG, Inception, DenseNet, MobileNet, GoogLeNet, and AlexNet have commonly been considered for detecting defects, hazards, construction materials and equipment, and progress states (Pal and Hsieh 2021). Recent literature investigating the performance of different CNN architectures is summarized in Table 2. For example, Shang et al. (2018) reported an accuracy of 93.7% for the model using GoogLeNet. Although VGG achieved relatively high accuracy with adequate data sets (Dais et al. 2021; Kim et al. 2021; Su and Wang 2020), it overfit when trained on a limited data set (Chen-McCaig et al. 2017). Shang et al. (2018) experimented with the effect of data size on the performance of CNN architectures and found that VGG and Inception performed relatively well compared with similar methods.

| CNN architecture (reported accuracy, %) | Dais et al. (2021) | Su and Wang (2020) | Kim et al. (2021) | Chen-McCaig et al. (2017) | Shang et al. (2018) |
|---|---|---|---|---|---|
| ResNet | 91.6 | – | 97.0 | – | 92.2 |
| VGG | 88.0 | – | 99.8 | 100 | 98.6 |
| Inception | 88.4 | 97.1 | 99.8 | 94.6 | 95.5 |
| DenseNet | 92.4 | 97.4 | – | – | – |
| MobileNet | 95.3 | 96.6 | – | – | – |
| GoogLeNet | – | – | – | – | 93.7 |
| AlexNet | – | – | – | – | – |

In addition, the number of trainable parameters is an essential consideration when selecting a CNN architecture, because it determines the training speed and the computational capacity required. Simon and Uma (2020) reported that Inception V3 benefits from factorizing convolutions into smaller kernels and, as a result, has only 6.4 million trainable parameters. Compared with VGG16, Inception V3 has more than 120 million fewer parameters, and it has about 13 million fewer parameters than DenseNet201. Therefore, Inception V3 was adopted in this research for feature extraction using transfer learning.

Texture-Based Features

Unlike classical machine learning methods, which rely on hand-designed features, current deep learning methods extract features automatically and form generalizations based on textural patterns. This generalization process is free from human control and considers multiple dimensions (Das et al. 2020). It becomes a limitation for small data sets, which contain too few instances to build reliable generalizations on descriptors and patterns.

Identifying a material from a detailed, grain-level image is difficult with a limited image data set. However, researchers have experimented with removing extraneous information from image data to expose patterns that are valuable to the classification algorithm. Kalidindi (2020) showed the advantages of extracting special features from material images to help machine learning algorithms focus on specific microstructural properties, which both reduces computational expense and enhances model performance. Limited data sets, in particular, benefit from feature engineering. Dai et al. (2020) proposed a machine learning method for characterizing materials from limited data sets by engineering features. They compared the accuracy of the proposed model when trained with the original data sets and when trained with only the important descriptors, with the redundant ones removed. The maximum cross-validation accuracy improved significantly, from 75% to 86%.

Microstructural patterns have been extracted from images using various methods, from applying filters with specific properties to the raw image (Zhu et al. 2005) to computing relative differences between neighboring pixel values (Singh et al. 2022). Image texture extraction using filter banks such as Gabor (Jain and Farrokhnia 1991) or Leung-Malik (LM) (Varma and Zisserman 2009) is relatively straightforward. However, increasing the number of transformed filters in a filter bank makes the process computationally time-consuming (Patlolla et al. 2012). Texture extraction can also be performed by applying morphological operations (Soille 2002) to natural images. Morphological operations usually rely on the color attributes of an image to determine the shapes and edges of textural marks. They can be faster than filter-based methods; however, their dependency on color values limits their applicability to images taken under uncontrolled illumination, such as on construction sites.

Instead of computing texture from global pixel color values, methods based on relative differences between neighboring pixel attributes can yield texture descriptors that are invariant to ambient illumination. Recent adaptations of the local binary pattern (LBP) and gray-level co-occurrence matrix (GLCM) methods for texture analysis have provided promising results in robust texture extraction. In this research, the LBP (Huang et al. 2011) and GLCM (Ojala et al. 2002) methods were adopted for texture extraction.

Texture features are essential when color and shape carry little discriminative information, that is, when fine granularity and surface projections matter more for pattern recognition. GLCM and LBP are the texture features used in this study, along with CNN-based features, to obtain more robust features for image recognition.

Local Binary Pattern

The LBP operates on the eight pixels neighboring a center pixel, comparing the center value with each of the surrounding pixel values (Singh et al. 2022). The method is binary: depending on its value relative to the central pixel, each neighboring pixel is assigned a value of 0 or 1. Multiple variants of LBP have been created to resolve limitations of the traditional LBP. Traditional LBP, for instance, has limitations in describing the texture of flat surfaces. Therefore, Heikkilä et al. (2009) proposed the center-symmetric LBP (CSLBP), which widens the span of the pixel variation search by comparing center-symmetric pairs of neighboring pixels with each other instead of comparing each neighbor with the center pixel. Similarly, to reduce the effect of image noise on the quality of the texture extracted using LBP, Rassem and Khoo (2014) proposed the local ternary pattern (LTP). Instead of binary codes, LTP codes each pixel difference as 1, 0, or -1, depending on whether the neighboring pixel lies above, within, or below a threshold band around the central value.
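The three codings described above can be sketched with NumPy for integer grayscale images. This is an illustrative implementation under stated assumptions (a 3 × 3 neighborhood of radius 1, no interpolation, border pixels skipped, hypothetical default thresholds), not the ACS-LBP and RCS-LBP descriptors adopted in this research. The CS-LBP function follows the formulation of Heikkilä et al. (2009), which compares the four center-symmetric neighbor pairs, and the LTP function returns the usual split into "upper" and "lower" binary patterns.

```python
import numpy as np

# Neighbor offsets (row, col) around the center pixel, clockwise from the top-left.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def _neighbor(g, dy, dx):
    """View of the neighbor at offset (dy, dx) for every interior pixel of g."""
    h, w = g.shape
    return g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]

def lbp_codes(gray):
    """Classic 8-neighbor LBP: bit k is 1 when neighbor k >= center (codes 0..255)."""
    g = np.asarray(gray, dtype=np.int64)
    center = _neighbor(g, 0, 0)
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(OFFSETS):
        codes |= (_neighbor(g, dy, dx) >= center).astype(np.int64) << bit
    return codes

def cs_lbp_codes(gray, threshold=0):
    """CS-LBP: compare the 4 center-symmetric neighbor pairs with each other (codes 0..15)."""
    g = np.asarray(gray, dtype=np.int64)
    codes = np.zeros((g.shape[0] - 2, g.shape[1] - 2), dtype=np.int64)
    for bit in range(4):
        a = _neighbor(g, *OFFSETS[bit])
        b = _neighbor(g, *OFFSETS[bit + 4])
        codes |= ((a - b) > threshold).astype(np.int64) << bit
    return codes

def ltp_codes(gray, t=5):
    """LTP: +1 if neighbor >= center + t, -1 if neighbor <= center - t, else 0,
    returned as the pair of binary 'upper' (+1) and 'lower' (-1) patterns."""
    g = np.asarray(gray, dtype=np.int64)
    center = _neighbor(g, 0, 0)
    upper = np.zeros(center.shape, dtype=np.int64)
    lower = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(OFFSETS):
        n = _neighbor(g, dy, dx)
        upper |= (n >= center + t).astype(np.int64) << bit
        lower |= (n <= center - t).astype(np.int64) << bit
    return upper, lower
```

A normalized histogram of the codes, for example `np.bincount(codes.ravel(), minlength=256) / codes.size`, is the usual texture feature vector. Because only relative comparisons are used, adding a constant brightness offset to the whole image leaves the LBP codes unchanged, which is the illumination robustness the text refers to.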

The most recent adaptations of LBP, the attractive and repulsive center-symmetric LBPs (ACS-LBP and RCS-LBP), were developed by El Merabet et al. (2019) to increase robustness against illumination variations and reduce computational complexity. Researchers in the construction industry have used LBP and its variants to detect concrete defects (Nguyen and Hoang 2022), drywall patches and small construction elements (Hamledari et al. 2017), and pitting corrosion (Hoang 2020), among other applications. Nguyen and Hoang (2022) compared multiple LBP variant texture descriptors for detecting concrete spalls, and their experimental results show that ACS-LBP and RCS-LBP have a promising capacity to detect concrete spalls in construction site photos. In this research, we adopted ACS-LBP and RCS-LBP to extract texture from construction material images taken under uncontrolled site illumination.

Gray-Level Co-Occurrence Matrix

GLCM is a texture analysis method that examines the spatial arrangement of pixels. It counts how often pairs of pixels with specific gray-level values occur in a specified spatial arrangement in an image, stores these counts in a co-occurrence matrix, and then extracts statistical measures from this matrix.

Like LBP, the GLCM texture analysis method computes relative characteristics of neighboring pixels. However, GLCM derives five specific texture features (entropy, contrast or inertia, correlation, energy, and homogeneity) (Conners and Harlow 1980; Mutlag et al. 2020). These features are generally computed for different parameter settings, such as the distance and directional angle between pixel pairs (Singh et al. 2017).
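To make the construction of the matrix and its statistics concrete, the following NumPy sketch builds a symmetric, normalized GLCM for one pixel offset and derives common Haralick-style statistics, including the dissimilarity measure mentioned in the methodology. It is an assumption-laden illustration, not the feature extractor used in this study: the image must already be quantized to a small number of gray levels, energy is taken as the square root of the angular second moment (the scikit-image convention), and correlation is set to 1 for a constant image. Libraries such as scikit-image offer equivalent functionality (`graycomatrix` and `graycoprops`).

```python
import numpy as np

def glcm(gray, dy, dx, levels):
    """Symmetric, normalized GLCM for one pixel offset (dy, dx), e.g. (0, 1) = distance 1 at 0 degrees.
    `gray` must already be quantized to integers in [0, levels)."""
    g = np.asarray(gray, dtype=np.int64)
    h, w = g.shape
    # Keep only pixel pairs whose neighbor falls inside the image.
    r0, r1 = max(0, -dy), min(h, h - dy)
    c0, c1 = max(0, -dx), min(w, w - dx)
    ref = g[r0:r1, c0:c1]
    nbr = g[r0 + dy:r1 + dy, c0 + dx:c1 + dx]
    counts = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)  # count co-occurring gray-level pairs
    counts = counts + counts.T  # symmetric: (i, j) and (j, i) are counted together
    return counts / counts.sum()

def glcm_features(p):
    """Haralick-style statistics of a normalized GLCM p."""
    i, j = np.indices(p.shape)
    mu_i, mu_j = (i * p).sum(), (j * p).sum()
    sd_i = np.sqrt(((i - mu_i) ** 2 * p).sum())
    sd_j = np.sqrt(((j - mu_j) ** 2 * p).sum())
    nz = p[p > 0]
    if sd_i * sd_j == 0:
        corr = 1.0  # constant image: correlation defined as 1
    else:
        corr = ((i - mu_i) * (j - mu_j) * p).sum() / (sd_i * sd_j)
    return {
        "contrast": ((i - j) ** 2 * p).sum(),
        "dissimilarity": (np.abs(i - j) * p).sum(),
        "homogeneity": (p / (1.0 + (i - j) ** 2)).sum(),
        "energy": np.sqrt((p ** 2).sum()),  # square root of the angular second moment
        "correlation": corr,
        "entropy": -(nz * np.log2(nz)).sum(),
    }
```

For an 8-bit photo, a coarse quantization such as `gray // 32` (8 levels) keeps the matrix small; computing the statistics for several offsets, for example `(0, 1)`, `(-1, 1)`, `(-1, 0)`, and `(-1, -1)` for 0°, 45°, 90°, and 135°, yields the numerical vector a downstream network can consume. The distances and angles used in this study are not stated here, so these offsets are only an example.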

The performance of GLCM has been widely explored in medical image processing (Durgamahanthi et al. 2021; Ghalati et al. 2022) and materials (Prasad et al. 2022). In the construction domain, GLCM has been explored for asphalt pavement raveling detection (Hoang 2019), crack severity level detection (Wang et al. 2021), HVAC defect detection (Wang and Su 2014), and drill bit wear detection (Wang and Su 2014). GLCM outperformed LBP in various publications (Öztürk and Akdemir 2018; Sthevanie and Ramadhani 2018). Therefore, the proposed model combines only GLCM texture features with CNN features to increase generalization performance on small data.

Transfer-Learning and Texture-Based Features

Although combining transfer learning with texture-based features has not been widely studied in the construction research domain, multiple applications have been proposed in areas such as medical image processing. For instance, Aswiga et al. (2021) proposed a method that takes advantage of both transfer learning and feature extraction to perform a refined classification of images. In their approach, CNN transfer learning and feature extraction are executed independently, one after another, for two-layer classification: the result of the feature extraction, after processing through an intermediary domain, was used as input to the transfer learning. However, rather than addressing data limitations, their goal in merging texture features with transfer learning was to improve performance and reduce false negatives. Similarly, Abraham (2019) combined GLCM feature extraction with a CNN model to increase performance. They applied data augmentation to enlarge the available medical data for training the CNN model and performed GLCM feature clustering, which reduced the number of false positives and increased the model sensitivity.

Although these methods produced promising results, adopting them directly in the construction industry needs further investigation, primarily because the methods of both Aswiga et al. (2021) and Abraham (2019) were trained on medical images (mammogram and blood smear images), which, unlike construction site images, are produced in a controlled environment. The proposed method concatenates CNN features enhanced by transfer learning with GLCM texture features to boost its confidence in pattern recognition, even for images taken in an uncontrolled environment.

Methodology

In this work, the state-of-the-art Inception V3 model and a DNN were used for feature extraction. The proposed model can be divided into three phases: (1) extracting domain feature maps using transfer learning, (2) extracting features from GLCM texture statistics with a DNN, and (3) combining the features for classification. The complete process is presented in Fig. 2.

In the first phase, we fine-tuned an Inception V3 model (Google Cloud 2022; Szegedy et al. 2016) by initializing it with parameters transferred from the general domain (the ImageNet classification data set) and adding customized fully connected layers. We optimized hyperparameters, such as the learning rate and batch size, to obtain the highest accuracy on the data set. We then extracted the vectorized feature maps from the layer before the softmax (classification) layer. These feature maps carry the knowledge learned in the first phase, transferred from the general domain and adapted to the construction domain, and are used to boost the performance of the model.

In the second phase, we derived several GLCM features, such as contrast, correlation, energy, homogeneity, and dissimilarity, to represent the texture of an image. These GLCM statistics were then passed to the DNN to extract the GLCM-based DNN features.

In the third phase, the transferred feature maps and the GLCM-based DNN features were concatenated, and the combined features were used to train fully connected layers for classifying the target materials. The two feature sets were combined so that knowledge transferred from an enormous general domain and fine-tuned on the limited construction data, together with pixel-level texture features, could enhance classification performance on the limited construction data set. Specifically, the concatenated vector representation of each image serves as a set of super features that overcomes data scarcity in the construction domain.

The network architecture (Fig. 3) used in this study has two branches that simultaneously feed the pretrained Inception V3 CNN and the texture-based DNN. The resulting network can accept multiple inputs, including numerical, categorical, and image data, at the same time. Raw construction material images were fed into Inception V3, and the GLCM numerical data were fed into the DNN. The outputs of Inception V3 (1,024 dimensions) and the DNN (256 dimensions) are concatenated to produce a 1,280-dimensional vector, which is passed through two more fully connected layers. The first layer had two nodes followed by a rectified linear unit (ReLU) activation, and the second (output) layer applied a softmax activation over the seven material-condition classes to produce the prediction.
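The two-branch design described above maps naturally onto the Keras functional API. The sketch below is a hedged approximation, not the authors' code: the 1,024-unit CNN feature layer, the 256-unit texture layer, the concatenation into 1,280 dimensions, the 2-unit ReLU layer, and the softmax output follow the text, whereas the global-average pooling of Inception V3, five GLCM statistics as the DNN input, seven output classes (one per material condition in Table 3), the frozen base, and the Adam learning rate are assumptions. `build_fusion_model` is a hypothetical name.

```python
import tensorflow as tf

layers = tf.keras.layers

NUM_CLASSES = 7        # assumption: one class per material condition in Table 3
NUM_GLCM_FEATURES = 5  # assumption: contrast, correlation, energy, homogeneity, dissimilarity

def build_fusion_model(image_size=299, weights="imagenet", train_base=False, hidden_units=2):
    # Branch 1: Inception V3 without its original classifier head
    # (pretrained on ImageNet when weights="imagenet").
    image_in = layers.Input(shape=(image_size, image_size, 3), name="image")
    base = tf.keras.applications.InceptionV3(
        include_top=False, weights=weights, input_tensor=image_in, pooling="avg")
    base.trainable = train_base  # False: use as a fixed feature extractor; True: fine-tune it
    cnn_features = layers.Dense(1024, activation="relu", name="cnn_features")(base.output)

    # Branch 2: a small DNN on the numerical GLCM statistics.
    glcm_in = layers.Input(shape=(NUM_GLCM_FEATURES,), name="glcm")
    texture_features = layers.Dense(256, activation="relu", name="texture_features")(glcm_in)

    # Fusion: 1,024 + 256 = 1,280-dimensional "super feature" vector.
    fused = layers.Concatenate(name="fusion")([cnn_features, texture_features])
    hidden = layers.Dense(hidden_units, activation="relu", name="hidden")(fused)
    output = layers.Dense(NUM_CLASSES, activation="softmax", name="prediction")(hidden)

    model = tf.keras.Model(inputs=[image_in, glcm_in], outputs=output)
    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-4),
                  loss="sparse_categorical_crossentropy", metrics=["accuracy"])
    return model
```

Images would be preprocessed with `tf.keras.applications.inception_v3.preprocess_input` before being passed to the `image` input, and training would call `model.fit([images, glcm_stats], labels, ...)` with integer class labels. A common two-stage practice is to train the new layers with the base frozen and then rebuild or recompile with `train_base=True` and a low learning rate to fine-tune Inception V3.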

Case Study

To test the proposed model, an experiment was conducted using a data set collected from a construction site at the New York University Abu Dhabi campus. Close-up images were gathered from cast concrete columns, concrete block walls, different layers of plaster finish, wire mesh applied under the plaster, chiseled concrete elements, and gypsum walls. These surfaces were selected to represent detailed construction appearance conditions that are usually overlooked. The number of images per class was also imbalanced, which could further challenge the model. The number of images per material condition is presented in Table 3. The model was further challenged with a reduced number of images (the very small data set column in Table 3).

| Material condition | Limited data set: all collected images | Very small data set: images |
|---|---|---|
| CMU wall | 24 | 10 |
| Chiseled concrete | 49 | 10 |
| Concrete | 18 | 10 |
| First coat plaster | 37 | 10 |
| Gypsum | 26 | 10 |
| Mesh | 25 | 10 |
| Second coat plaster | 29 | 10 |
| Total | 208 | 70 |
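The class imbalance mentioned above can be quantified directly from the counts in Table 3. This short computation only restates the table; the imbalance ratio (largest class divided by smallest class) is a summary statistic introduced here for illustration.

```python
# Image counts per material condition, copied from Table 3 (limited data set).
counts = {
    "CMU wall": 24,
    "Chiseled concrete": 49,
    "Concrete": 18,
    "First coat plaster": 37,
    "Gypsum": 26,
    "Mesh": 25,
    "Second coat plaster": 29,
}

total = sum(counts.values())
shares = {name: n / total for name, n in counts.items()}      # fraction of the data set per class
imbalance_ratio = max(counts.values()) / min(counts.values())  # largest class / smallest class

print(total)                      # 208
print(round(imbalance_ratio, 2))  # 2.72
print(max(shares, key=shares.get), round(max(shares.values()), 3))  # Chiseled concrete 0.236
```

Chiseled concrete makes up about 24% of the limited data set and concrete about 9%, a ratio of roughly 2.7 to 1; the very small data set is balanced by construction, with 10 images per class.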

The images were taken using a Canon EOS 80D (Tokyo), a Nikon D5200, and a Nikon D850 (Tokyo). The Nikon D850 (46 megapixels) has about twice the resolution of the Canon EOS 80D (24 megapixels) and the Nikon D5200 (24 megapixels). The D850 also has better low-light performance and a higher maximum ISO, giving better control of light and shadow in the pictures, as well as more focus points. All cameras were set to autofocus when the images were collected. The quality variation across the image set makes generalization more challenging for the models.

To reflect realistic scenarios, the images were taken with various random angles, camera poses, and illumination conditions. This was intentionally done to challenge the model with small data containing significant environmental noise. In addition, images were taken at close range, with only one material condition in each frame. Fig. 4 shows some examples of the images taken with random ambient illumination and camera orientations. Examples shown are for concrete masonry unit (CMU) wall [Fig. 4(a)], chiseled concrete [Fig. 4(b)], concrete [Fig. 4(c)], first coat plaster [Fig. 4(d)], gypsum [Fig. 4(e)], mesh [Fig. 4(f)], and second coat plaster [Fig. 4(g)]. The data set used for this study can be found in Mengiste et al. (2023).

The collected raw data set (Table 3) was inflated using the automatic data augmentation provided by the Keras Image Data Generator (Keras Team 2015). Each original image was subjected to an augmentation process that included rotation, shear, flip, shift, brightness, and zoom, as follows:

• Rotation: random angles of up to 90° from the original orientation

• Shear: slanting the image up to a maximum shear of 20%

• Flip: both horizontal and vertical

• Shift: random shifts in the horizontal and vertical directions of up to 20%

• Brightness: a random brightness factor between 0.5 and 1.5

• Zoom: random zooming between 0% and 20% of the original size

As a result of the augmentation process, the total set of images increased from 208 to 10,400 in the case of limited data and from 70 to 350 in the case of very small data sets.
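The augmentation steps listed above correspond to arguments of the Keras `ImageDataGenerator` cited in the text. The sketch below is a hedged illustration rather than the authors' code: the exact argument values are not reported, so treating the "20%" shear as a 20° `shear_range` (Keras interprets this argument as an angle in degrees) and the 0% to 20% zoom as `zoom_range=0.2` are assumptions, and `copies_per_image` and `augment` are hypothetical helper names. The counts above imply 50 images per original for the limited data set (10,400/208) and 5 per original for the very small data set (350/70). Note that `ImageDataGenerator` is deprecated in recent TensorFlow releases.

```python
import numpy as np

# Hypothetical mapping of the augmentation list onto ImageDataGenerator arguments.
# The authors' exact values are not reported; entries marked ASSUMPTION are guesses.
AUGMENTATION_KWARGS = {
    "rotation_range": 90,            # random rotation within +/-90 degrees
    "shear_range": 20.0,             # ASSUMPTION: "20%" read as a 20-degree shear angle
    "horizontal_flip": True,
    "vertical_flip": True,
    "width_shift_range": 0.2,        # up to 20% of the image width
    "height_shift_range": 0.2,       # up to 20% of the image height
    "brightness_range": (0.5, 1.5),  # random brightness factor
    "zoom_range": 0.2,               # ASSUMPTION: Keras samples zoom factors in [0.8, 1.2]
    "fill_mode": "nearest",
}


def copies_per_image(n_original, n_augmented):
    """Number of augmented images generated per original image."""
    if n_augmented % n_original:
        raise ValueError("augmented total is not a whole multiple of the originals")
    return n_augmented // n_original


def augment(images, copies, seed=0):
    """Return `copies` randomly transformed variants of each (H, W, C) image."""
    # Imported lazily so the configuration above can be inspected without TensorFlow.
    from tensorflow.keras.preprocessing.image import ImageDataGenerator

    generator = ImageDataGenerator(**AUGMENTATION_KWARGS)
    variants = [
        generator.random_transform(image, seed=seed + i * copies + k)
        for i, image in enumerate(images)
        for k in range(copies)
    ]
    return np.stack(variants)
```

For example, `augment(images, copies_per_image(208, 10_400))` would return 50 transformed variants of every image in an `(N, H, W, 3)` array. TensorFlow is imported inside `augment` so that the configuration can be inspected without it.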

The proposed model was evaluated against five other models that use varying combinations of strategies commonly suggested in the literature for overcoming data limitations. These strategies include data augmentation, the combination of several traditional machine learning classification algorithms (super learners), and popular texture feature extraction methods (GLCM and LBP). The models also use different combinations of CNN and transfer learning. By examining these models, we compared diverse techniques spanning variations in architecture, data augmentation, transfer learning, and feature extraction, with the aim of finding the most effective approaches for detecting construction material conditions when data are scarce. In summary, the experimental process conducted in this case study includes the following models:

• CNN with raw data: A basic CNN architecture trained on the raw (nonaugmented) data.

• CNN with data augmentation: A basic CNN-based classifier trained on the inflated data set (based on the augmentation process described previously).

• CNN with data augmentation and transfer learning: A transfer learning model based on the Inception V3 CNN architecture, trained on the original data set after it was augmented and inflated in size (based on the augmentation process described previously).

• LBP with super learners and data augmentation: LBP features were extracted from the augmented images and used to train super learners composed of various stacked traditional machine learning methods, e.g., random forest, decision tree, linear regression, Gaussian naive Bayes, CatBoost, XGBoost, AdaBoost, and other similar classic methods. This model was included to evaluate the performance of LBP texture features without the effect of deep learning features in the case of small data sets (data augmentation was based on the process described previously).

• GLCM with super learners and data augmentation: Similar to the LBP experiment, except that GLCM features are extracted from the augmented images and the super learner classification model is trained on the extracted GLCM feature set instead of LBP features.

• GLCM + CNN with transfer learning (proposed model): Inception V3–based deep transfer learning is used to extract features. In parallel, GLCM texture features, which can strengthen the model’s performance, are extracted and concatenated with the transferred CNN features.
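The GLCM branch of the proposed model and its fusion with CNN features can be illustrated with a small NumPy sketch. It builds a symmetric, normalized co-occurrence matrix for a pixel offset, computes four common texture properties (contrast, homogeneity, energy, and correlation, following the definitions used by scikit-image's `graycoprops`), and concatenates them with a CNN feature vector. The quantization to 8 gray levels, the four offsets at distance 1, the choice of properties, and the helper names are assumptions for illustration; the paper's exact GLCM settings are not given in this section. In practice, the CNN vector would come from the pretrained Inception V3 (for example, its 2,048-dimensional global-average-pooled output).

```python
import numpy as np


def glcm(gray, dx, dy, levels=8):
    """Symmetric, normalized GLCM of an 8-bit grayscale image for offset (dx, dy)."""
    img = np.asarray(gray, dtype=np.float64)
    # Quantize 0..255 intensities into `levels` gray levels.
    q = np.clip((img / 256.0 * levels).astype(int), 0, levels - 1)
    h, w = q.shape
    # Crop so that pixel (r, c) in `a` pairs with pixel (r + dy, c + dx) in `b`.
    r0, r1 = max(0, -dy), h - max(0, dy)
    c0, c1 = max(0, -dx), w - max(0, dx)
    a = q[r0:r1, c0:c1]
    b = q[r0 + dy:r1 + dy, c0 + dx:c1 + dx]
    counts = np.zeros((levels, levels))
    np.add.at(counts, (a.ravel(), b.ravel()), 1)
    counts = counts + counts.T  # count each pair in both directions
    return counts / counts.sum()


def glcm_properties(p):
    """Contrast, homogeneity, energy, and correlation of a normalized GLCM."""
    i, j = np.indices(p.shape)
    contrast = np.sum(p * (i - j) ** 2)
    homogeneity = np.sum(p / (1.0 + (i - j) ** 2))
    energy = np.sqrt(np.sum(p ** 2))
    mu_i, mu_j = np.sum(i * p), np.sum(j * p)
    sd_i = np.sqrt(np.sum(p * (i - mu_i) ** 2))
    sd_j = np.sqrt(np.sum(p * (j - mu_j) ** 2))
    if sd_i * sd_j == 0:
        correlation = 1.0  # constant image: correlation is taken as 1
    else:
        correlation = np.sum(p * (i - mu_i) * (j - mu_j)) / (sd_i * sd_j)
    return np.array([contrast, homogeneity, energy, correlation])


def texture_vector(gray, levels=8):
    """GLCM properties at distance 1 for 0, 45, 90, and 135 degree offsets."""
    offsets = [(1, 0), (1, -1), (0, -1), (-1, -1)]
    return np.concatenate(
        [glcm_properties(glcm(gray, dx, dy, levels)) for dx, dy in offsets]
    )


def fuse_features(cnn_vector, gray, levels=8):
    """Concatenate a CNN feature vector with the GLCM texture vector."""
    return np.concatenate([np.ravel(cnn_vector), texture_vector(gray, levels)])
```

Because the GLCM is made symmetric, the sign of each offset does not affect the result; the fused vector (for example, 2,048 + 16 values) would then be passed to the classifier head.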

To avoid bias during training and testing, to preserve the class proportions across the data splits, and to maintain the generalizability of the models, stratified sampling and regularization with early stopping were used.
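Stratified sampling keeps the class proportions of Table 3 in both the seen and unseen parts of the data. The NumPy sketch below, with the hypothetical helper `stratified_split`, draws a 20% test portion per class (matching the 80%/20% split reported in the results) and rounds each class share to the nearest whole image; rounding per class is an assumption. scikit-learn's `train_test_split` with its `stratify` argument provides equivalent behavior in practice.

```python
import numpy as np


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Split sample indices so each class keeps roughly its proportion in both parts."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        rng.shuffle(idx)
        # At least one test image per class, rounded to the nearest whole image.
        n_test = max(1, int(round(test_fraction * idx.size)))
        test_idx.extend(idx[:n_test].tolist())
        train_idx.extend(idx[n_test:].tolist())
    return np.sort(np.array(train_idx)), np.sort(np.array(test_idx))


# Class counts of the limited data set (Table 3).
TABLE_3_COUNTS = {
    "CMU wall": 24, "Chiseled concrete": 49, "Concrete": 18,
    "First coat plaster": 37, "Gypsum": 26, "Mesh": 25, "Second coat plaster": 29,
}
labels = np.repeat(np.arange(len(TABLE_3_COUNTS)), list(TABLE_3_COUNTS.values()))
train, test = stratified_split(labels)
```

With the Table 3 counts this yields 42 test images (5, 10, 4, 7, 5, 5, and 6 per class) and 166 images for training and validation. Early stopping, the other safeguard mentioned above, is available in Keras as the `EarlyStopping` callback (for example, monitoring `val_loss` with `restore_best_weights=True`); the patience value used by the authors is not reported.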

The critical hyperparameters in all the experimental and proposed models include the number of layers, the number of neurons, the batch size, the activation function, the optimizer, the learning rate, and the number of epochs. Traditional techniques such as grid and random search can be time-consuming because every candidate configuration requires a full training run. Hence, Bayesian optimization was used in this study to tune the hyperparameters (Feurer and Hutter 2019).
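Bayesian optimization replaces exhaustive search with a surrogate model of the objective that is updated after every trial. The sketch below is a minimal, from-scratch illustration and not the tool the authors used (which this section does not name): a Gaussian process with a squared-exponential kernel models the validation loss as a function of a single hyperparameter, and the expected-improvement criterion selects the next value to try. The kernel length scale, the candidate grid, and the toy objective (a quadratic in the base-10 logarithm of the learning rate) are assumptions; a real run would replace the objective with training and validating the network.

```python
import math

import numpy as np


def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between two 1D arrays of (normalized) inputs."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)


def gp_posterior(x_obs, y_obs, x_query, noise=1e-6):
    """Posterior mean and standard deviation of a zero-mean, unit-variance GP."""
    k = rbf_kernel(x_obs, x_obs) + noise * np.eye(x_obs.size)
    k_s = rbf_kernel(x_obs, x_query)
    mean = k_s.T @ np.linalg.solve(k, y_obs)
    var = 1.0 - np.sum(k_s * np.linalg.solve(k, k_s), axis=0)
    return mean, np.sqrt(np.maximum(var, 1e-12))


def expected_improvement(mean, std, best):
    """Expected improvement below the best observed value (minimization)."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + np.array([math.erf(v / math.sqrt(2.0)) for v in z]))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf


def bayesian_optimize(objective, low, high, n_init=3, n_iter=12, seed=0):
    """Minimize `objective` on [low, high] with a GP surrogate and expected improvement."""
    rng = np.random.default_rng(seed)
    candidates = np.linspace(low, high, 201)
    x = rng.uniform(low, high, n_init)
    y = np.array([objective(v) for v in x])

    def to_unit(v):
        return (v - low) / (high - low)  # normalize inputs for the kernel

    for _ in range(n_iter):
        y_norm = (y - y.mean()) / (y.std() + 1e-9)  # standardize observed losses
        mean, std = gp_posterior(to_unit(x), y_norm, to_unit(candidates))
        ei = expected_improvement(mean, std, y_norm.min())
        x_next = candidates[np.argmax(ei)]
        x = np.append(x, x_next)
        y = np.append(y, objective(x_next))
    best = int(np.argmin(y))
    return x[best], y[best]


# Toy stand-in for "validation loss versus log10(learning rate)".
best_log_lr, best_loss = bayesian_optimize(lambda v: (v + 3.0) ** 2, -5.0, -1.0)
```

Each iteration costs one evaluation of the objective, so the total training budget here is 15 runs instead of one per grid point.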

Results and Discussion

The overall accuracy (i.e., the number of correctly classified images divided by the total number of images, multiplied by 100, across all material conditions) of the proposed and comparison models using the limited and very small data sets is summarized in Table 4. All performance measurements were conducted by splitting the data into 80% for training and validation (seen) and the remaining 20% for testing (unseen). The testing data were randomly selected and inspected for inclusiveness, distribution, and randomness, and they were held out (unseen) during training and validation. All models, including the comparison models and the models developed and trained using limited samples and very few samples, were tested on the same testing data set.

| No. | Model | Accuracy, limited samples (%) | Accuracy, very small samples (%) |
|---|---|---|---|
| 1 | CNN without data augmentation | 51 | 30 |
| 2 | CNN with data augmentation | 38 | 35 |
| 3 | CNN with data augmentation + transfer learning | 67 | 45 |
| 4 | LBP with super learners and data augmentation | 72 | 23 |
| 5 | GLCM with super learners and data augmentation | 76 | 63 |
| 6 | GLCM + CNN with transfer learning (proposed model) | 95 | 71 |

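For the proposed model, reducing the sample size from 208 to 70 images (about 66% fewer images) lowered the overall accuracy by only 24 percentage points (from 95% to 71%), which indicates a promising performance despite a drastic reduction in the data used. In addition, the proposed model (Model 6) achieved the highest overall accuracy with both image sets, exceeding the next-best configuration (Model 5) by 19 percentage points with the limited data set (95% versus 76%) and by 8 percentage points with the very small data set (71% versus 63%).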
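The sample-size and accuracy comparisons discussed here can be recomputed directly from Tables 3 and 4. The short script below (helper names are hypothetical) keeps the distinction between a relative change in percent and a difference in percentage points explicit; the same `relative_change` helper also reproduces the 174% increase of the GLCM-based over the LBP-based model with very few samples (63% versus 23%).

```python
# Overall accuracy (%) from Table 4: (limited samples, very small samples).
TABLE_4 = {
    "CNN without data augmentation": (51, 30),
    "CNN with data augmentation": (38, 35),
    "CNN with data augmentation + transfer learning": (67, 45),
    "LBP with super learners and data augmentation": (72, 23),
    "GLCM with super learners and data augmentation": (76, 63),
    "GLCM + CNN with transfer learning (proposed model)": (95, 71),
}
PROPOSED = "GLCM + CNN with transfer learning (proposed model)"


def relative_change(old, new):
    """Relative change from `old` to `new`, in percent."""
    return 100.0 * (new - old) / old


def margin_over_best_alternative(table, proposed, column):
    """Percentage-point lead of the proposed model over the best other model."""
    best_other = max(acc[column] for name, acc in table.items() if name != proposed)
    return table[proposed][column] - best_other


sample_reduction = relative_change(208, 70)                          # about -66.3%
accuracy_drop_points = TABLE_4[PROPOSED][0] - TABLE_4[PROPOSED][1]   # 24 points
margins = [margin_over_best_alternative(TABLE_4, PROPOSED, c) for c in (0, 1)]  # [19, 8]
```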

One notable result in Table 4 is the drop in accuracy obtained when the basic CNN was combined with data augmentation (Model 2). One possible explanation for this low performance is that data augmentation distorts essential features that would otherwise increase the prediction confidence of the classifier. Given the small size of the initial data, the resulting distortion misleads the CNN feature extraction, reducing both precision and recall. This can be seen by comparing the first two models, in which the CNN is developed without and with data augmentation: with the limited data set, the overall accuracy of the CNN dropped from 51% to 38% when it was trained on the inflated data, although it increased slightly with the very small data set (from 30% to 35%).

As Table 4 shows, adding more synthetic (augmented) data, as in the case of the CNN with data augmentation, resulted in lower performance than the CNN without data augmentation on the limited data set. In contrast, focusing on the patterns and reducing unnecessary features, as in the proposed model, resulted in relatively high accuracy in both cases (i.e., with limited and with very small sample sizes).

Similarly, reducing unwanted details and focusing on the significant textural features boosted the confidence and performance of the GLCM- and LBP-based models. The proposed model went further by introducing GLCM features into the CNN, which improved its capability to recognize patterns with diverse variations and its robustness to image transformations. The results show that the proposed model achieves high accuracy and requires fewer learning iterations than the other models.

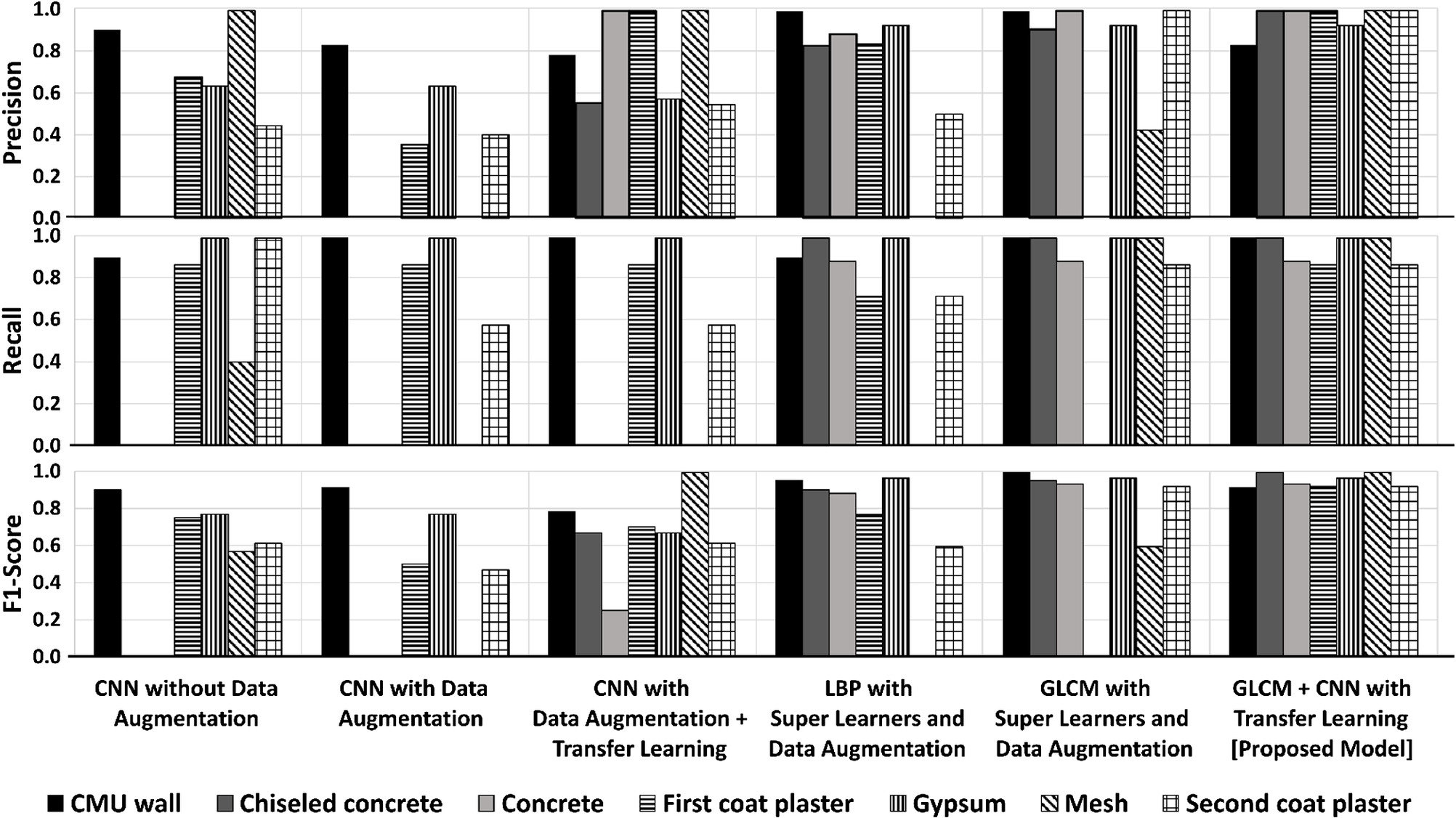

For imbalanced data sets, the overall classification accuracy does not capture the full performance of a model. Therefore, to further assess the performance of each model for each material condition, the precision, recall, and F1-score were calculated (Fig. 5). On all of these metrics, the proposed model outperformed the other combinations.
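Overall accuracy, precision, recall, and F1-score can all be derived from a confusion matrix. The NumPy sketch below (helper names are hypothetical) computes the overall accuracy as defined earlier together with the per-class scores. The unweighted mean of the per-class F1-scores is one common way to obtain an average F1-score, although the exact averaging behind Figs. 5 and 6 is not stated here; scikit-learn's `precision_recall_fscore_support` offers equivalent functionality.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def classification_metrics(y_true, y_pred, n_classes):
    """Overall accuracy (%) plus per-class precision, recall, and F1-score."""
    cm = confusion_matrix(y_true, y_pred, n_classes)
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)  # images assigned to each class
    actual = cm.sum(axis=1)     # images that truly belong to each class
    zeros = np.zeros(n_classes)
    precision = np.divide(tp, predicted, out=zeros.copy(), where=predicted > 0)
    recall = np.divide(tp, actual, out=zeros.copy(), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    accuracy = 100.0 * tp.sum() / cm.sum()
    return accuracy, precision, recall, f1
```

For instance, with `y_true = [0, 0, 1, 1, 2, 2]` and `y_pred = [0, 1, 1, 1, 2, 0]`, the overall accuracy is about 66.7% and the per-class F1-scores are 0.5, 0.8, and 0.67, so a model can look moderately accurate overall while one class is recognized far worse than another.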

Although the model combining the CNN and data augmentation had the lowest scores for certain material conditions (Fig. 6), such as chiseled concrete, concrete, and mesh, it achieved relatively higher F1-scores for the CMU wall, gypsum, first coat plaster, and second coat plaster material conditions (91%, 77%, 50%, and 47%, respectively). Nevertheless, the CNN with data augmentation performed worse overall than the proposed model across all material conditions. The average F1-score of the proposed model was 149% higher than that of the model combining CNN and data augmentation. This result indicates that the proposed model was able to capture textural features that were overlooked by the CNN-based model.

As the comparison in Fig. 6 shows, the two models that do not include CNN features (i.e., LBP with super learners and GLCM with super learners) yielded average F1-scores above 70%. Although the LBP- and GLCM-based models outperformed the proposed model in recognizing CMU walls, they failed to recognize certain material conditions. For instance, the LBP-based model failed to recognize mesh and performed weakly on second coat plaster and first coat plaster. Similarly, the GLCM-based model failed to recognize first coat plaster and had weak F1-scores for mesh.

The overall accuracies of the LBP- and GLCM-based models were 72% and 76%, respectively (Table 4). However, with very few samples, the overall accuracy of the GLCM-based model was much better than that of the LBP-based model (63% versus 23%, an increase of 174%). Regardless of the data imbalance, the proposed model, which combines features from the CNN architecture and from the GLCM textures, scored higher on all metrics than all of the experimental models, except for the CMU wall and first coat plaster material conditions, where the models combining LBP and GLCM with super learners achieved slightly higher scores.

A likely reason for the lower score of the proposed model on the CMU wall is that some of the images representing first coat plaster show the CMU slightly visible behind the applied mortar, which could produce misleading features from the CNN architecture and influence the classifier trained on the concatenated features. This explanation is consistent with the high performance of the CNN-based experimental models on the CMU walls.

Limitations

The proposed model performed well on the data from the case study. However, because it relies on the detailed textural patterns of the material surface, small variations in surface characteristics could have a significant effect on the final outcome. Therefore, experiments on other material conditions and a sensitivity study of the model with respect to the level of variation in material conditions should be considered. It is also important to investigate and control the effect of misleading features that result in relatively lower performance of the model for some specific material conditions (e.g., first coat plaster).

Moreover, the model should be further evaluated for overfitting when applied to images with various quality limitations. Images with significant digital noise resulting from reflections, illumination, the camera lens, and sensor capacity could negatively affect the model’s performance.

Conclusion and Outlook

In this paper, a novel state-of-the-art deep learning model combining deep transfer learning and GLCM texture features was proposed to improve classification performance with small data sets. The general knowledge learned by a state-of-the-art model pretrained in a general domain (Inception V3) was transferred to the construction domain. In addition, GLCM-based texture features were used to develop a model to classify material conditions. The experimental models used to benchmark the proposed model were chosen based on widely used approaches for addressing data limitations.

We initialized the transferred layers with their pretrained parameters to extract feature maps, derived the spatial gray-level relations of each image, and concatenated the two to capture both feature maps (convolutional features, image patterns) and texture granularity (pixel patterns). Together, the transferred features and the texture features improved the accuracy of material classification. The results obtained using images from a real construction site demonstrate the effectiveness of the proposed model. The proposed model yielded promising overall classification accuracies of 95% and 71% for the limited (208 images) and very small (70 images) data sets, respectively, and outperformed the other model configurations using CNN, super learners, and transfer learning. When the performance of the models for each material condition was examined more closely using precision, recall, and F1-score, the proposed model outperformed the other combinations by at least 10%. Although the proposed model attained promising results in the case study, future work could include additional material conditions and the development of a sensitivity control that would allow the proposed model to be applied to understanding the detailed state of a complex construction site.

Data Availability Statement

All data that support the findings of this study are available in an online repository (Mengiste et al. 2023). All models and code generated and used during this study are available from the corresponding author upon reasonable request.

Acknowledgments

This work has benefited from the collaboration with the New York University Abu Dhabi (NYUAD) Center for Interacting Urban Networks (CITIES). The authors would like to thank William Fulton and Mohamed Rasheed from the Campus Planning and Projects Office at NYUAD for their support and for allowing access to ongoing projects on campus. The algorithms developed in this study used the high-performance computing (HPC) and research computing services from NYUAD’s Center for Research Computing.

References

Abraham, J. B. 2019. “Plasmodium detection using simple CNN and clustered GLCM features.” Preprint, submitted September 2019. https://arxiv.org/abs/1909.13101.

Akinosho, T. D., L. O. Oyedele, M. Bilal, A. O. Ajayi, M. D. Delgado, O. O. Akinade, and A. A. Ahmed. 2020. “Deep learning in the construction industry: A review of present status and future innovations.” J. Build. Eng. 32 (Nov): 101827. https://doi.org/10.1016/j.jobe.2020.101827.

Aswiga, R. V., R. Aishwarya, and A. P. Shanthi. 2021. “Augmenting transfer learning with feature extraction techniques for limited breast imaging datasets.” J. Digital Imaging 34 (3): 618–629. https://doi.org/10.1007/s10278-021-00456-z.

Baduge, S. K., S. Thilakarathna, J. S. Perera, M. Arashpour, P. Sharafi, B. Teodosio, A. Shringi, and P. Mendis. 2022. “Artificial intelligence and smart vision for building and construction 4.0: Machine and deep learning methods and applications.” Autom. Constr. 141 (Sep): 104440. https://doi.org/10.1016/j.autcon.2022.104440.

Baek, F., S. Park, and H. Kim. 2019. “Data augmentation using adversarial training for construction-equipment classification.” Preprint, submitted November 2019. http://arxiv.org/abs/1911.11916.

Bozinovski, S. 2020. “Reminder of the first paper on transfer learning in neural networks, 1976.” Informatica 44 (3): 291–302. https://doi.org/10.31449/inf.v44i3.2828.

Braun, A., and A. Borrmann. 2019. “Combining inverse photogrammetry and BIM for automated labeling of construction site images for machine learning.” Autom. Constr. 106 (Oct): 102879. https://doi.org/10.1016/j.autcon.2019.102879.

Chen-McCaig, Z., R. Hoseinnezhad, and A. Bab-Hadiashar. 2017. “Convolutional neural networks for texture recognition using transfer learning.” In Proc., 2017 Int. Conf. on Control, Automation and Information Sciences, ICCAIS, 187–192. New York: IEEE. https://doi.org/10.1109/ICCAIS.2017.8217573.

Conners, R. W., and C. A. Harlow. 1980. “Toward a structural textural analyzer based on statistical methods.” Comput. Graphics Image Process. 12 (3): 224–256. https://doi.org/10.1016/0146-664X(80)90013-1.

Dai, D., T. Xu, X. Wei, G. Ding, Y. Xu, J. Zhang, and H. Zhang. 2020. “Using machine learning and feature engineering to characterize limited material datasets of high-entropy alloys.” Comput. Mater. Sci. 175 (Apr): 109618. https://doi.org/10.1016/j.commatsci.2020.109618.

Dais, D., İ. E. Bal, E. Smyrou, and V. Sarhosis. 2021. “Automatic crack classification and segmentation on masonry surfaces using convolutional neural networks and transfer learning.” Autom. Constr. 125 (May): 103606. https://doi.org/10.1016/j.autcon.2021.103606.

Das, R., M. Arshad, P. K. Manjhi, and S. D. Thepade. 2020. “Improved feature generalization in smaller datasets with early feature fusion of handcrafted and automated features for content based image classification.” In Proc., 11th Int. Conf. on Computing, Communication and Networking Technologies, ICCCNT 2020. New York: IEEE. https://doi.org/10.1109/ICCCNT49239.2020.9225439.

Davis, P., F. Aziz, M. T. Newaz, W. Sher, and L. Simon. 2021. “The classification of construction waste material using a deep convolutional neural network.” Autom. Constr. 122 (Feb): 103481. https://doi.org/10.1016/j.autcon.2020.103481.

Durgamahanthi, V., J. Anita Christaline, and A. Shirly Edward. 2021. “GLCM and GLRLM based texture analysis: Application to brain cancer diagnosis using histopathology images.” Adv. Intell. Syst. Comput. 1172 (Mar): 691–706. https://doi.org/10.1007/978-981-15-5566-4_61.

El merabet, Y., Y. Ruichek, and A. El idrissi. 2019. “Attractive-and-repulsive center-symmetric local binary patterns for texture classification.” Eng. Appl. Artif. Intell. 78 (Mar): 158–172. https://doi.org/10.1016/j.engappai.2018.11.011.

Fang, W., L. Ding, P. E. D. Love, H. Luo, H. Li, F. Peña-Mora, B. Zhong, and C. Zhou. 2020a. “Computer vision applications in construction safety assurance.” Autom. Constr. 110 (Feb): 103013. https://doi.org/10.1016/j.autcon.2019.103013.

Fang, W., P. E. D. Love, H. Luo, and L. Ding. 2020b. “Computer vision for behaviour-based safety in construction: A review and future directions.” Adv. Eng. Inf. 43 (Jan): 100980. https://doi.org/10.1016/j.aei.2019.100980.

Feurer, M., and F. Hutter. 2019. Hyperparameter optimization, 3–33. Cham, Switzerland: Springer.

Ghalati, M. K., A. Nunes, H. Ferreira, P. Serranho, and R. Bernardes. 2022. “Texture analysis and its applications in biomedical imaging: A survey.” IEEE Rev. Biomed. Eng. 15 (Sep): 222–246. https://doi.org/10.1109/RBME.2021.3115703.

Google Cloud. 2022. “Advanced guide to inception v3.” Accessed November 20, 2022. https://cloud.google.com/tpu/docs/inception-v3-advanced.

Hamledari, H., B. McCabe, and S. Davari. 2017. “Automated computer vision-based detection of components of under-construction indoor partitions.” Autom. Constr. 74 (Feb): 78–94. https://doi.org/10.1016/j.autcon.2016.11.009.

Heikkilä, M., M. Pietikäinen, and C. Schmid. 2009. “Description of interest regions with local binary patterns.” Pattern Recognit. 42 (3): 425–436. https://doi.org/10.1016/j.patcog.2008.08.014.

Hoang, N. D. 2019. “Automatic detection of asphalt pavement raveling using image texture based feature extraction and stochastic gradient descent logistic regression.” Autom. Constr. 105 (Sep): 102843. https://doi.org/10.1016/j.autcon.2019.102843.

Hoang, N. D. 2020. “Image processing-based pitting corrosion detection using metaheuristic optimized multilevel image thresholding and machine-learning approaches.” Math. Probl. Eng. 2020 (May): 1–19. https://doi.org/10.1155/2020/6765274.

Huang, D., C. Shan, M. Ardabilian, Y. Wang, and L. Chen. 2011. “Local binary patterns and its application to facial image analysis: A survey.” IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 41 (6): 765–781. https://doi.org/10.1109/TSMCC.2011.2118750.

Inoue, H. 2018. “Data augmentation by pairing samples for images classification.” Preprint, submitted April 23, 2018. http://arxiv.org/abs/1801.02929.

Jain, A. K., and F. Farrokhnia. 1991. “Unsupervised texture segmentation using Gabor filters.” Pattern Recognit. 24 (12): 1167–1186. https://doi.org/10.1016/0031-3203(91)90143-S.

Kalidindi, S. R. 2020. “Feature engineering of material structure for AI-based materials knowledge systems.” J. Appl. Phys. 128 (Jul): 41103. https://doi.org/10.1063/5.0011258.

Kandel, I., M. Castelli, and L. Manzoni. 2022. “Brightness as an augmentation technique for image classification.” Emer. Sci. J. 6 (4): 881–892. https://doi.org/10.28991/ESJ-2022-06-04-015.

Keras Team. 2015. “TensorFlow Keras preprocessing Image data generator.” Accessed November 21, 2022. https://www.tensorflow.org/api_docs/python/tf/keras/preprocessing/image/ImageDataGenerator.

Khallaf, R., and M. Khallaf. 2021. “Classification and analysis of deep learning applications in construction: A systematic literature review.” Autom. Constr. 129 (Sep): 103760. https://doi.org/10.1016/j.autcon.2021.103760.

Kim, B., N. Yuvaraj, K. R. Sri Preethaa, and R. Arun Pandian. 2021. “Surface crack detection using deep learning with shallow CNN architecture for enhanced computation.” Neural Comput. Appl. 33 (15): 9289–9305. https://doi.org/10.1007/s00521-021-05690-8.

Liang, D., F. Yang, T. Zhang, and P. Yang. 2018. “Understanding mixup training methods.” IEEE Access 6 (Sep): 58774–58783. https://doi.org/10.1109/ACCESS.2018.2872698.

López de la Rosa, F., J. L. Gómez-Sirvent, R. Sánchez-Reolid, R. Morales, and A. Fernández-Caballero. 2022. “Geometric transformation-based data augmentation on defect classification of segmented images of semiconductor materials using a ResNet50 convolutional neural network.” Expert Syst. Appl. 206 (Nov): 117731. https://doi.org/10.1016/j.eswa.2022.117731.

Mahmoodzadeh, A., H. R. Nejati, and M. Mohammadi. 2022. “Optimized machine learning modelling for predicting the construction cost and duration of tunnelling projects.” Autom. Constr. 139: 104305. https://doi.org/10.1016/j.autcon.2022.104305.

Mengiste, E., B. G. de Soto, and T. Hartmann. 2022. “Recognition of the condition of construction materials using small datasets and handcrafted features.” J. Inf. Technol. Construct. 27 (46): 951–971. https://doi.org/10.36680/J.ITCON.2022.046.

Mengiste, E., K. R. Mannem, S. A. Prieto, and B. Garcia de Soto. 2023. “Dataset of images for model development using transfer-learning and texture features recognition of the conditions of construction materials with small datasets.” Accessed May 17, 2023. https://data.mendeley.com/datasets/zv66h54b8j/1.

Moreno-Barea, F. J., F. Strazzera, J. M. Jerez, D. Urda, and L. Franco. 2018. “Forward noise adjustment scheme for data augmentation.” In Proc., 2018 IEEE Symp. Series on Computational Intelligence (SSCI), 728–734. New York: IEEE.

Mostafa, K., and T. Hegazy. 2021. “Review of image-based analysis and applications in construction.” Autom. Constr. 122 (Feb): 103516. https://doi.org/10.1016/j.autcon.2020.103516.

Mutlag, W. K., S. K. Ali, Z. M. Aydam, and B. H. Taher. 2020. “Feature extraction methods: A review.” J. Phys. Conf. Ser. 1591 (1): 012028. https://doi.org/10.1088/1742-6596/1591/1/012028.

Nalepa, J., M. Marcinkiewicz, and M. Kawulok. 2019. “Data augmentation for brain-tumor segmentation: A review.” Front. Comput. Neurosci. 13 (Dec): 83. https://doi.org/10.3389/fncom.2019.00083.

Ng, H. W., V. D. Nguyen, V. Vonikakis, and S. Winkler. 2015. “Deep learning for emotion recognition on small datasets using transfer learning.” In Proc., 2015 ACM Int. Conf. on Multimodal Interaction (ICMI), 443–449. New York: Association for Computing Machinery. https://doi.org/10.1145/2818346.2830593.

Nguyen, H., and N.-D. Hoang. 2022. “Computer vision-based classification of concrete spall severity using metaheuristic-optimized extreme gradient boosting machine and deep convolutional neural network.” Autom. Constr. 140 (Mar): 104371. https://doi.org/10.1016/j.autcon.2022.104371.

Ogunseiju, O. R., J. Olayiwola, A. A. Akanmu, and C. Nnaji. 2021. “Recognition of workers’ actions from time-series signal images using deep convolutional neural network.” Smart Sustainable Built Environ. 11 (4): 812–831. https://doi.org/10.1108/SASBE-11-2020-0170.

Ojala, T., M. Pietikäinen, and T. Mäenpää. 2002. “Multiresolution gray-scale and rotation invariant texture classification with local binary patterns.” IEEE Trans. Pattern Anal. Mach. Intell. 24 (7): 971–987. https://doi.org/10.1109/TPAMI.2002.1017623.

Ottoni, A. L. C., R. M. de Amorim, M. S. Novo, and D. B. Costa. 2022. “Tuning of data augmentation hyperparameters in deep learning to building construction image classification with small datasets.” Int. J. Mach. Learn. Cybern. 14 (1): 171–186. https://doi.org/10.1007/s13042-022-01555-1.

Öztürk, Ş., and B. Akdemir. 2018. “Application of feature extraction and classification methods for histopathological image using GLCM, LBP, LBGLCM, GLRLM and SFTA.” Procedia Comput. Sci. 132 (Jan): 40–46. https://doi.org/10.1016/j.procs.2018.05.057.

Pal, A., and S. H. Hsieh. 2021. “Deep-learning-based visual data analytics for smart construction management.” Autom. Constr. 131 (Nov): 103892. https://doi.org/10.1016/j.autcon.2021.103892.

Patlolla, D. R., S. Voisin, H. Sridharan, and A. M. Cheriyadat. 2012. “GPU accelerated textons and dense sift features for human settlement detection from high-resolution satellite imagery.” In Proc., 13th Int. Conf. on GeoComputation, 1–7. Richardson, TX: Univ. of Texas at Dallas.

Prasad, G., G. S. Vijay, and R. Kamath. 2022. “Comparative study on classification of machined surfaces using ML techniques applied to GLCM based image features.” Mater. Today Proc. 62 (Jan): 1440–1445. https://doi.org/10.1016/j.matpr.2022.01.285.

Rassem, T. H., and B. E. Khoo. 2014. “Completed local ternary pattern for rotation invariant texture classification.” Sci. World J. 2014 (Apr): 373254. https://doi.org/10.1155/2014/373254.

Shang, X., Y. Xu, L. Qi, A. H. Madessa, and J. Dong. 2018. “An evaluation of convolutional neural networks on material recognition.” In Proc., 2017 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computed, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), 1–6. New York: IEEE. https://doi.org/10.1109/UIC-ATC.2017.8397467.

Shorten, C., and T. M. Khoshgoftaar. 2019. “A survey on image data augmentation for deep learning.” J. Big Data 6 (1): 1–48. https://doi.org/10.1186/s40537-019-0197-0.

Simon, P., and V. Uma. 2020. “Deep learning based feature extraction for texture classification.” Procedia Comput. Sci. 171 (Jan): 1680–1687. https://doi.org/10.1016/j.procs.2020.04.180.

Singh, A., R. K. Sunkaria, and A. Kaur. 2022. “A review on local binary pattern variants.” In Proc., 1st Int. Conf. on Computational Electronics for Wireless Communications: ICCWC 2021, 545–552. Berlin: Springer. https://doi.org/10.1007/978-981-16-6246-1_46.

Singh, S., D. Srivastava, and S. Agarwal. 2017. “GLCM and its application in pattern recognition.” In Proc., 5th Int. Symp. on Computational and Business Intelligence, ISCBI 2017, 20–25. New York: IEEE. https://doi.org/10.1109/ISCBI.2017.8053537.

Soille, P. 2002. Morphological texture analysis: An introduction, 215–237. Berlin: Springer.

Sthevanie, F., and K. N. Ramadhani. 2018. “Spoofing detection on facial images recognition using LBP and GLCM combination.” J. Phys. Conf. Ser. 971 (1): 012014. https://doi.org/10.1088/1742-6596/971/1/012014.

Su, C., and W. Wang. 2020. “Concrete cracks detection using convolutional neural network based on transfer learning.” Math. Probl. Eng. 2020 (Aug): 7240129. https://doi.org/10.1155/2020/7240129.

Summers, C., and M. J. Dinneen. 2018. “Improved mixed-example data augmentation.” In Proc., 2019 IEEE Winter Conf. on Applications of Computer Vision, WACV 2019, 1262–1270. New York: IEEE. https://doi.org/10.48550/arxiv.1805.11272.

Szegedy, C., V. Vanhoucke, S. Ioffe, J. Shlens, and Z. Wojna. 2016. “Rethinking the inception architecture for computer vision.” In Proc., IEEE Computer Society Conf. on Computer Vision and Pattern Recognition, 2818–2826. New York: IEEE.

Varma, M., and A. Zisserman. 2009. “A statistical approach to material classification using image patch exemplars.” IEEE Trans. Pattern Anal. Mach. Intell. 31 (11): 2032–2047. https://doi.org/10.1109/TPAMI.2008.182.

Wang, K., et al. 2017. “Generative adversarial networks: Introduction and outlook.” IEEE/CAA J. Autom. Sin. 4 (4): 588–598. https://doi.org/10.1109/JAS.2017.7510583.

Wang, W., W. Hu, W. Wang, X. Xu, M. Wang, Y. Shi, S. Qiu, and E. Tutumluer. 2021. “Automated crack severity level detection and classification for ballastless track slab using deep convolutional neural network.” Autom. Constr. 124 (Apr): 103484. https://doi.org/10.1016/j.autcon.2020.103484.

Wang, Y., P. C. Liao, C. Zhang, Y. Ren, X. Sun, and P. Tang. 2019. “Crowdsourced reliable labeling of safety-rule violations on images of complex construction scenes for advanced vision-based workplace safety.” Adv. Eng. Inf. 42 (Oct): 101001. https://doi.org/10.1016/j.aei.2019.101001.

Wang, Y., and J. Su. 2014. “Automated defect and contaminant inspection of HVAC duct.” Autom. Constr. 41 (May): 15–24. https://doi.org/10.1016/j.autcon.2014.02.001.

Yang, B., K. Xu, H. Wang, and H. Zhang. 2022. “Random transformation of image brightness for adversarial attack.” J. Intell. Fuzzy Syst. 42 (3): 1693–1704. https://doi.org/10.3233/JIFS-211157.

Yang, Q., W. Shi, J. Chen, and W. Lin. 2020. “Deep convolution neural network-based transfer learning method for civil infrastructure crack detection.” Autom. Constr. 116 (Aug): 103199. https://doi.org/10.1016/j.autcon.2020.103199.

Yu, F., A. Seff, Y. Zhang, S. Song, T. Funkhouser, and J. Xiao. 2015. “LSUN: Construction of a large-scale image dataset using deep learning with humans in the loop.” Preprint, submitted June 10, 2015. http://arxiv.org/abs/1506.03365.

Zheng, Z., Z. Zhang, and W. Pan. 2020. “Virtual prototyping- and transfer learning-enabled module detection for modular integrated construction.” Autom. Constr. 120 (Dec): 103387. https://doi.org/10.1016/j.autcon.2020.103387.

Zhu, S., C. Guo, and Y. Wang. 2005. “What are Textons?” Int. J. Comput. Vision 62 (1–2): 121–143. https://doi.org/10.1007/s11263-005-4638-1.


Copyright

This work is made available under the terms of the Creative Commons Attribution 4.0 International license, https://creativecommons.org/licenses/by/4.0/.

History

Received: Apr 27, 2023

Accepted: Jul 11, 2023

Published online: Sep 25, 2023

Published in print: Jan 1, 2024

Discussion open until: Feb 25, 2024

ASCE Technical Topics:

- Artificial intelligence and machine learning

- Computer programming

- Computing in civil engineering

- Construction engineering

- Construction management

- Construction materials

- Education

- Engineering fundamentals

- Engineering materials (by type)

- Information management

- Materials engineering

- Materials processing

- Mathematical functions

- Mathematics

- Matrix (mathematics)

- Model accuracy

- Models (by type)

- Neural networks

- Practice and Profession

- Training
