| Part I | Gender | 1. What is your gender? | A Male; B Female | — |

| Age | 2. What is your age? | A ; B 19–25; C 26–30; D 31–40; E 41–50; F 51–60; G 61 and over |

| Educational level | 3. What is your level of education? | A Junior middle school or below; B Senior high school; C Vocational college; D Undergraduate; E Postgraduate |

| Qualified to drive or not | 4. Do you hold a valid driver’s license? | A Yes; B No |

| Driving years | 5. How many years of actual driving experience do you have? | A None; B 1 year or less; C 1–3 years; D 3–5 years; E 5 years or more |

| Knowledge related to AVs | 6. What do you think is the highest level of technology for AVs currently in use in the market? | A Level 1, supported driving; B Level 2, partially automated; C Level 3, conditionally automated; D Level 4, highly automated; E Level 5, fully automated |

| 7. Match each level of autonomous driving technology with its appropriate functions. Which of the following options would be the most appropriate to choose? | A Emergency braking; B Constant speed cruise control; C Lane departure warning; D Automatic lane change; E Congestion following; F Adaptive cruise control; G Automatic lane keeping; H Highway automatic driving; I Highway automatic navigation |

| Functions included in Level 1: Driver assistance. |

| Functions included in Level 2: Partial driving automation. |

| Functions included in Level 3: Conditional driving automation. |

| Functions included in Level 4: High driving automation. |

| Functions included in Level 5: Full driving automation. |

| Part II | Perceived usefulness | 8. Please rate your satisfaction with each of the following usefulness characteristics of AVs. Which of the following options would be the most appropriate to choose? | A Very satisfied; B Satisfied; C Neutral; D Unsatisfied; E Very unsatisfied | Adapted from Davis et al. (1989), Bansal et al. (2016), Shin and Managi (2017), and Kaur and Rampersad (2018) |

| AVs reduce traffic congestion and improve travel efficiency. |

| AVs reduce driving stress through functions such as lane change reminders and fixed speed cruise control. |

| Self-driving features free up the driver's hands and allow temporary checking of cell phone information. |

| AVs reduce driving difficulties through self-parking and auto-following. |

| | Perceived ease of use | 9. Please rate each of the following ease of use characteristics of AVs. Which of the following options would be the most appropriate to choose? | | |

| The classification of automated driving functions is reasonable. |

| The operating process of AVs is simplified. |

| The voice reminders of AVs are timely. |

| The operation manuals of AVs are clearly written. |

| | Behavioral attitudes | 10. Considering the convenience that AVs bring to you, what is your attitude toward high-level AVs? | A Strongly agree; B Agree; C Neutral attitude; D Disagree; E Strongly disagree | Adapted from Ajzen (1991), Gold et al. (2015), and Acheampong and Cugurullo (2019) |

| 11. Considering recent news reports about accidents involving AVs, what is your attitude toward AVs? |

| 12. Experts have verified that human operation was the main cause of recent accidents involving AVs. Considering your own operating skill, what is your attitude toward AVs? |

| | Subjective norms | 13. Please rate how strongly each of the following subjective normative factors influences your use and purchase of AVs. Which of the following options would be the most appropriate to choose? | A Very large; B Large; C Average; D Small; E No effect | |

| Financial subsidies and related policies. |

| Media advertising and celebrity endorsement. |

| The purchase and refusal behaviors of colleagues and others. |

| Attitudes and support of friends and family members. |

| | Perceived behavioral control | 14. Suppose you have been using an AV for more than a year, and today you are driving to an unfamiliar city on highways and city roads in cloudy, rainy weather. How would you rate your ability to operate the automated driving system? | A 1 point; B 2 points; C 3 points; D 4 points; E 5 points | |

| 15. Suppose you have one year of driving experience with AVs. After you have transferred driving control to the automated driving system, you face a potential driving risk. Rate your ability to handle this situation. |

| 16. For autonomous driving levels or functions that you are not familiar with, please rate how you would likely operate the system after reviewing its manual, based on your existing driving experience. | A Bold use; B Exploratory use; C Unable to judge; D Afraid to use; E Refuse to use |

| | HMRP | 17. If an AV is involved in an accident, which safety hazards do you think may have caused the accident? | A Human operation safety hazards; B Road environment safety hazards; C Vehicle system safety hazards; D Pedestrian interference; E Other safety hazards | Self-developed |

| | | 18. The technology level of AVs is rising, and driver-assistance functions are evolving. In this complex travel environment, which option best expresses how you would use an AV to ensure safe travel? | A Choose AVs with high technology levels for travel; B Choose AVs with high technology levels and diverse functions for travel; C Choose AVs with diverse functions for travel; D Choose an AV with diverse functions within the same technology level; E Hand over control of the vehicle and let the autonomous driving system make decisions, regardless of its technology level and functions | |

| | | 19. In a vehicle you are familiar with, after the driver hands over control to the driver-assistance system, how would you rate each of the following situations? | A 1 point; B 2 points; C 3 points; D 4 points; E 5 points | |

| The driver hands over driving control early or late. |

| The driver and the auxiliary system control vehicle operations at the same time. |

| In autonomous driving mode, the system continues to control the operation of the vehicle in the event of a decision conflict between the driver and the automated driving system. |

| After handing over control, the driver no longer pays attention to the state of the road because they rely on the system's judgment. |

| | Behavioral Intentions | 20. Based on your needs, what is your intention to use and purchase a high-level AV? | A Willing to use; B Use; C Indecisive; D Don’t use it; E Never use | Adapted from Gold et al. (2015) |

| 21. After hearing news of traffic accidents, do you plan to use or buy an AV at Level 3 or higher? | A Willing to buy; B Buy; C Hesitant; D Don’t buy but use; E Don’t buy, don’t use |

| 22. If you receive formal training for an AV and improve your control ability through regular test drives, what is your intention to use and purchase a high-level AV? | A Willing to buy; B Buy; C Hesitant; D Don’t buy but use; E Don’t buy, don’t use |

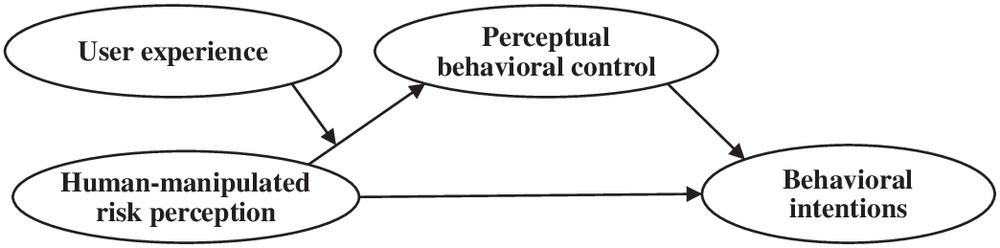

| Part III | User experience | 23. Based on your user experience, suppose you are using the latest Level 3 AV. For each of the following scenarios, which function would be the most appropriate to choose? | A Autofollow; B Automatic lane change; C Fixed speed cruise control; D Automatic parking; E Deceleration and braking | Self-developed |

| Highway travel on a rainy day. |

| Driving home after a weekend outing. |

| Parking on the road. |

| Traffic jams on urban roads during weekday commutes. |

| 24. Based on your user experience, which picture is the most appropriate for each of the following scenarios? | A Picture 1; B Picture 2; C Picture 3; D Picture 4; E Picture 5 |

| City roads on the way to work |

| Expressway |

| Suburban roads |

| Parking in a parking lot |

| Bad weather |

| Perceived behavioral control | 25. After a test drive and professional training, how much has your handling of the autopilot improved? Rate your current ability to operate the autopilot. | A 1 point; B 2 points; C 3 points; D 4 points; E 5 points |

| Behavioral attitude | 26. After the user experience and professional training, your perception of AVs has changed. To what extent do you agree? | A Strongly agree; B Agree; C Neutral attitude; D Disagree; E Strongly disagree |

| Behavioral intention | 27. After the user experience, your cognitive and maneuvering abilities have improved. Which option best expresses your intention to use and purchase an AV? | A Willing to buy; B Buy; C Hesitant; D Don’t buy but use; E Don’t buy, don’t use |