Journal of Water Resources Planning and Management’s Reproducibility Review Program: Accomplishments, Lessons, and Next Steps

Publication: Journal of Water Resources Planning and Management

Volume 150, Issue 8

Introduction

In November 2020, the editors of the Journal of Water Resources Planning and Management (JWRPM) launched a Reproducibility Review Program (Rosenberg et al. 2021). This initiative was inspired by studies showing a lack of reproducibility in water-related journals (Stagge et al. 2019), calls for better reviews of claims of reproducibility (Goodman et al. 2016), and similar review programs at journals in other domains (Rosenberg et al. 2021; Rosenberg and Watkins 2018). JWRPM’s voluntary program incentivizes authors to publish data, models, code, and directions with their articles so that an independent reviewer can replicate part or all of the authors’ work. The goal of the JWRPM Reproducibility Review Program is to promote a cultural shift toward making research more accessible and reproducible, thereby accelerating science and increasing impact. The program has five objectives (Rosenberg et al. 2021):

1. Encourage authors to make their results more reproducible.
2. Allow scientists and practitioners to more easily find and use reproducible work.
3. Encourage further sharing and interaction between authors and readers.
4. Recognize and reward authors who make their work more reproducible.
5. Increase the impact of work published in the journal.

For more details, visit the Reproducibility Hub (https://ascelibrary.org/reprod) for products of the Reproducibility Review Program, including:

• A description of the program philosophy and process,
• A special collection of all successfully reproduced manuscripts,
• A list of annual reproducibility award recipients,
• A description of Silver and Bronze badges awarded to papers with reproduced results and papers that share all data, models, code, and directions for use, and
• An online form where people can sign up to be reproducibility reviewers to help reproduce results of papers submitted to the program.

In this editorial, we—JWRPM’s associate editors for reproducibility (AERs)—share program accomplishments, challenges and lessons learned, and next steps to expand the pilot program to other ASCE journals. We also share our experience as a potential model to foster more reproducible and open research products, set forth as a goal by research sponsors and government agencies (Burgelman et al. 2019; European Commission et al. 2020; Nelson 2022; National Science Foundation and Institute of Education Sciences, US Department of Education 2018).

Accomplishments

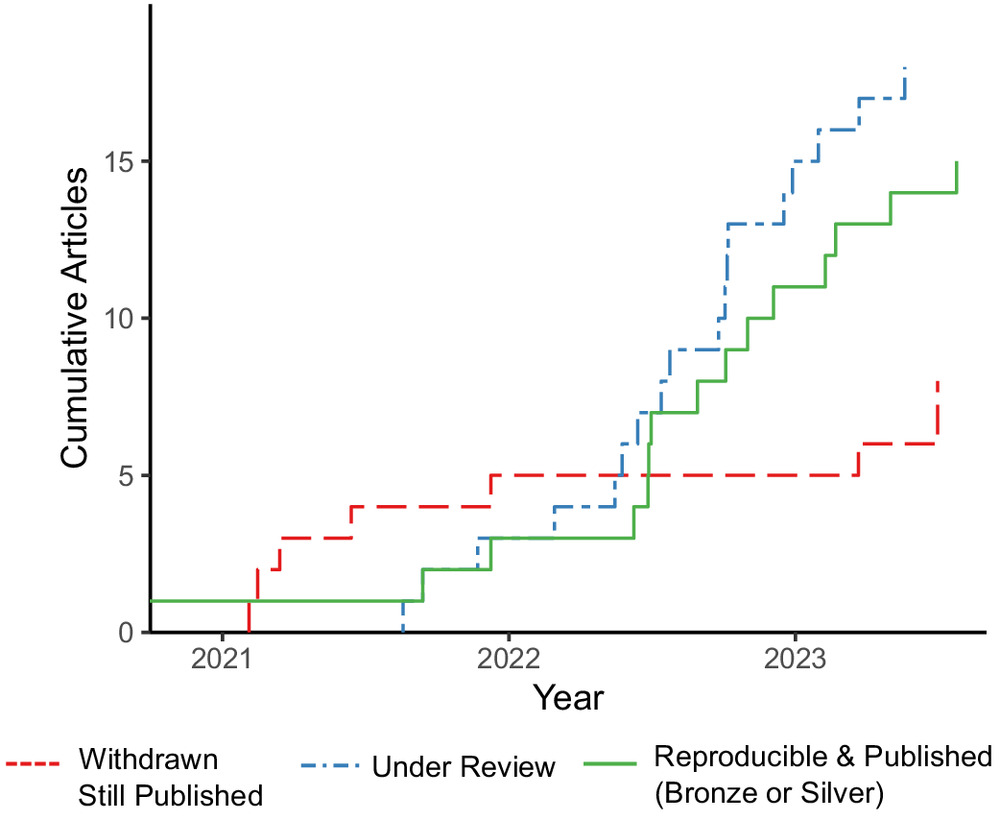

JWRPM’s Reproducibility Review Program is already successfully addressing its five initial objectives, and the program continues to build momentum. Motivating authors to make their work more reproducible (Objective 1) can be measured by participation in the program (Fig. 1). Since its inception in November 2020, approximately 3 years ago, the program has awarded 10 Silver badges for manuscripts where the reviewers could reproduce all or part of the study’s results, and three Bronze badges for manuscripts that provided research artifacts (Table 1). An additional 11 manuscripts are currently in various stages of reproducibility review. Furthermore, the authors of seven manuscripts elected to withdraw from the Reproducibility Review Program after an initial reproducibility review, but these manuscripts were ultimately published as traditional articles. Excluding manuscripts still in review or declined for technical reasons, 65% (13 of 20 manuscripts) were successfully reproduced by reviewers. These manuscripts are not representative of all JWRPM manuscripts because the authors self-selected by applying to the voluntary program. From November 2020 to July 2023, 557 articles were published by JWRPM, making the 13 reproduced articles an exclusive group (Bastidas Pacheco et al. 2023; Cordeiro et al. 2022; Hadjimichael et al. 2023; Jander et al. 2023; Jaramillo and Saldarriaga 2023; Morgan and Lane 2022; Obringer et al. 2022; Rasmussen et al. 2023; Rodríguez-Martínez et al. 2023; Thomas and Sela 2023; Tran et al. 2023; Vrachimis et al. 2022; Wang and Rosenberg 2023). We intend to further increase the proportion of JWRPM articles successfully handled by the JWRPM Reproducibility Review Program.

| Article status | Number of articles (November 2020 to November 2023) |
|---|---|
| Published, Silver reproducibility | 10 |
| Published, Bronze reproducibility | 3 |
| In review or revision | 11 |
| Published, but withdrew from reproducibility | 7 |
| Declined for technical review | 31 |
| Total | 62 |
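The 65% figure quoted above follows directly from the counts in Table 1. As a minimal sketch of that arithmetic (the dictionary keys are illustrative labels, not terms used by the journal):

```python
# Counts copied from Table 1 (November 2020 to November 2023).
# The key names are illustrative labels chosen for this sketch.
table1 = {
    "silver": 10,
    "bronze": 3,
    "in_review": 11,
    "withdrew": 7,
    "declined_technical": 31,
}

# All manuscripts that applied to the program.
total = sum(table1.values())

# Manuscripts that earned a Silver or Bronze badge.
badged = table1["silver"] + table1["bronze"]

# The denominator excludes manuscripts still in review and those
# declined on technical grounds, leaving badged plus withdrawn ones.
completed = badged + table1["withdrew"]

success_rate = badged / completed

print(f"total submissions: {total}")
print(f"badged: {badged} of {completed} ({success_rate:.0%})")
```

Publishing a small calculation like this alongside a summary table lets readers check which rows enter the numerator and denominator of a reported percentage.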

A major motivator for authors to participate in the program is the offer of free open access without author publishing charges (APCs) for papers receiving the Silver reproducibility badge, a significant $2,500 savings as of this writing. ASCE Publications and the Environmental and Water Resources Institute (EWRI) donated $40,000 and $20,000, respectively, to support free open access publishing. The 10 papers that met the Silver badge reproducibility criteria were awarded free open access publication. Offering open access publication with no APCs helps meet Objective 4, which is to recognize and reward authors who make their work more reproducible. Furthermore, JWRPM implemented annual awards to recognize and reward authors (Outstanding Effort to Make Results More Reproducible) as well as editors (Outstanding Effort to Reproduce Results). Papers that meet the Silver and Bronze criteria are awarded reproducibility badges, and JWRPM created a special collection page designed to drive readers to reproduced papers. Increased research visibility helps reward authors who make additional efforts for reproducibility.

Objectives 2, 3, and 5 all describe increased research impact in various forms. Three years is too soon to detect an increase in impact in citations or whether new research is building on successfully reproduced manuscripts. In the coming years, we foresee an increased impact by publishing reproduced papers as open access because papers published open access are downloaded and cited at higher rates across multiple fields (McCabe and Snyder 2014; Ottaviani 2016; Piwowar et al. 2007, 2018).

Challenges and Lessons Learned

ASCE intends to expand the JWRPM Reproducibility Review Program to other ASCE journals. This section presents some challenges and lessons learned from JWRPM’s experience to demonstrate how other journals could implement similar programs.

Challenge 1: Better Incorporate Reproducibility into the Article Submission and Review Workflow

A technical challenge with the Reproducibility Review Program was incorporating reproducibility reviews into the existing paper submission and review process. Reproducibility reviews are handled in parallel with traditional technical and content reviews. This setup allows reproducibility reviews to be independent of technical reviews, and the reproducibility review does not affect a decision to publish. Most editorial management software is not designed to support two editors (one for content and one for reproducibility) and two parallel reviews. For JWRPM, this challenge was addressed by making the AER more senior in the editorial management software. In this workflow, the AER assigns a content editor based on the editor in chief’s instruction and then acts as a pass-through once the content reviews and editor decisions are made. This additional step requires coordination between editors and some additional training for AERs and associate editors. If the Reproducibility Review Program grows, it may become feasible to redesign online journal submission and review tools to accommodate parallel reproducibility reviews.

Challenge 2: Educate Authors about the Program

Because the Reproducibility Review Program is new, there has been a learning curve for authors regarding what the program entails and the journal’s expectations. Publications such as the original policy description (Rosenberg et al. 2021) and this editorial help to clarify the process. We also established the aforementioned Reproducibility Hub on the ASCE webpage, including resources and short videos created by the AERs and journal staff (Rosenberg et al. 2023). The AERs have given presentations about the Reproducibility Review Program at academic conferences. We expect the requirements and process of the Reproducibility Review Program to become clearer as it grows in popularity and expands to other ASCE journals.

Challenge 3: Concern about Publication Delays

Despite broadly positive responses from authors, the most common concern has been that opting into a reproducibility review may delay publication. Our goal has been to parallelize reproducibility reviews to not affect the time to a publication decision based on technical merit. Since the program’s inception, the JWRPM staff have iteratively revised the process to streamline reproducibility reviews. More recently, we have shifted the timing of reproducibility reviews to lessen reviewer burden and make the process more efficient. When the program began, reproducibility reviews were assigned when a manuscript was first sent for content review. We are currently piloting a change to begin reproducibility reviews only after receiving a positive first technical review. We are also evaluating publishing online manuscripts accepted on technical merit while the reproducibility review is finalized. These efforts can speed up the reproducibility review process, while also ensuring that reproducibility review effort is not expended for manuscripts that are unlikely to be published.

Challenge 4: Recruiting Reproducibility Editors and Reviewers

The program relies on volunteers to perform reproducibility reviews. JWRPM initially recruited 10 AERs, and these editors have actively solicited volunteers to act as reproducibility reviewers through conference presentations and an online signup program on the Reproducibility Hub. The journal created a new position of section editor for reproducibility to manage the program and AERs. The journal has also created a new role for people who review manuscripts with the aim to reproduce results. Further, if the reviewer chooses, their name can be included with the published article as the reproducibility reviewer for recognition. In response to concerns about reviewer turnaround time, the new approach to perform reproducibility reviews after initial technical review decreases the burden on reproducibility reviewers.

Challenge 5: Not All Papers Can Be Reproduced

The decision to make the program voluntary was predicated on an understanding that not all papers can be made reproducible due to restrictions on data privacy, computational requirements, the embargoing of sensitive data, or other reasons. Embargoing was a major concern within the paleoclimate community, particularly with regard to early career researchers publishing portions of their research with data before graduation or project completion (Kaufman and PAGES 2k Special-Issue Editorial Team 2017). Other potential barriers to reproducing results include time-intensive simulations or random number generation. The random number generation issue can be partly addressed by authors setting and publishing the random number seed within their code. Making the reproducibility program optional can motivate a cultural shift toward reproducibility without being unnecessarily prescriptive or onerous.
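To illustrate the point about random number seeds, the following is a minimal sketch using Python's standard `random` module. The seed value, the function name `simulate_inflows`, and the distribution parameters are hypothetical and chosen only for illustration; they do not come from any paper in the program.

```python
import random

# Hypothetical seed; authors would publish whatever value they actually used.
SEED = 20201101


def simulate_inflows(n_years, seed):
    """Draw a synthetic series of annual inflows (illustrative only)."""
    # A local generator keeps the draw independent of global random state.
    rng = random.Random(seed)
    return [rng.gauss(100.0, 15.0) for _ in range(n_years)]


first_run = simulate_inflows(5, SEED)
second_run = simulate_inflows(5, SEED)

# Two runs with the same published seed yield the same series.
print(first_run == second_run)
```

A published seed reproduces the same draws only when the reviewer also uses the same generator and software version, so it works best alongside a documented run-time environment.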

Challenge 6: Follow the Format for Papers with Reproduced Results

The journal set up the Reproducibility Review Program with requirements for how to format papers for submission to the program. First, articles with reproduced results must include a data availability section. Within the data availability statement, authors must cite a permanent digital object identifier (DOI) and public locator for the data, model, code, and directions used in the work. Second, articles must include a reproducible results section. The reproducible results section must list the name of a person not affiliated with the study who reproduced results prior to submission. The reproducible results section must also state which results were reproduced. For example:

Haley Canham (Utah State University) and an anonymous reproducibility reviewer downloaded all data and code and reproduced the results in the figures of this study (Morgan and Lane 2022).

These steps are important to help authors identify, before submission, bugs, unclear directions, or other errors that would prevent others from reproducing results. Some papers submitted to the program did not have sections for data availability and/or reproduced results. Other papers failed to list a person not affiliated with the study who reproduced results prior to submission to the journal. Authors can now follow the examples of papers with reproduced results (e.g., Wang and Rosenberg 2023; Rodríguez-Martínez et al. 2023; Thomas and Sela 2023). We also created a checklist for steps to submit a manuscript to the reproducible results program (see the Reproducibility Hub).

Challenge 7: Difficulty to Reproduce Results for Work with Numerous Scripts or Manual Inputs

Journal AERs and reproducibility reviewers found it difficult to reproduce results for work that required a large number of different scripts to execute and/or workflows that required a lot of manual input. Human error challenged the reproducibility of these works. We now request authors follow a best practice of providing a single master script or “run-all” button that executes all code needed to reproduce figures and tables in the manuscript. A master script reduces human error and better documents the workflow in reproducible code. For an example, see Bastidas Pacheco et al. (2023).
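As a rough sketch of what a single master script can look like, the Python example below chains three placeholder steps behind one `run_all` entry point. The step names, the toy data, and the output format are all hypothetical; a real workflow would read the published data set and write the manuscript's figures and tables.

```python
def load_data():
    """Placeholder for reading the published data set."""
    return {"demand": [1.0, 2.0, 3.0, 4.0]}


def run_model(data):
    """Placeholder for the analysis; here, a simple mean."""
    values = data["demand"]
    return {"mean_demand": sum(values) / len(values)}


def make_outputs(results):
    """Placeholder for writing the figures and tables."""
    return [f"{name} = {value:.2f}" for name, value in results.items()]


def run_all():
    """Execute every step in order so that no manual input is needed."""
    log = []
    data = load_data()
    log.append("load_data")
    results = run_model(data)
    log.append("run_model")
    outputs = make_outputs(results)
    log.append("make_outputs")
    return log, outputs


if __name__ == "__main__":
    completed_steps, outputs = run_all()
    print("steps run:", ", ".join(completed_steps))
    for line in outputs:
        print(line)
```

Because the order of steps lives in code rather than in written directions, a reviewer runs one command instead of deciding which script to launch next.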

Challenge 8: Difficulty to Recreate Run-Time Environments

Journal AERs and reproducibility reviewers sometimes found it difficult to recreate the exact run-time environment used by the authors. The run-time environment includes the versions of the software programming language, libraries, packages, models, and other dependencies. Use of different versions may break dependencies or potentially generate different results. This is commonly caused by ongoing updates after an author posts their code to a repository. Two best practices are:

1. List the exact versions of all software, libraries, or packages the authors used. Then provide links and directions to where readers can find, download, and install the required version of each component.
2. Bundle all required materials in a binding unit such as MyDocker or a web-hosted notebook.

See Bastidas Pacheco et al. (2023) for an example of both methods.
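For the first practice, version information can be captured programmatically rather than typed by hand. The sketch below uses Python's standard `importlib.metadata` and `platform` modules. The function name `describe_environment` and the package list are assumptions for illustration, not part of any program requirement.

```python
import platform
from importlib import metadata


def describe_environment(packages):
    """Return one line per component giving the exact installed version."""
    lines = [
        f"python=={platform.python_version()}",
        f"platform: {platform.platform()}",
    ]
    for name in packages:
        try:
            lines.append(f"{name}=={metadata.version(name)}")
        except metadata.PackageNotFoundError:
            # Flag a missing dependency instead of failing silently.
            lines.append(f"{name}: not installed")
    return lines


# Hypothetical dependency list; authors would list what their code imports.
REQUIRED = ["numpy", "pandas"]

for line in describe_environment(REQUIRED):
    print(line)
```

Saving this output in the repository alongside the code gives reviewers a record of the environment in which the published results were generated.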

Next Steps

JWRPM intends to proceed with the Reproducibility Review Program, and editors will continue to advocate for the program. We aim to expand the number of published papers recognized for reproducibility. We also plan to hold training workshops to educate both potential reviewers and authors.

Currently, JWRPM is using external funding to publish successfully reproduced manuscripts as open access without author publishing charges. We hope to develop new funding sources and funding models to support this popular motivator. To our knowledge, JWRPM is the only journal with a business model that offers free open access as an incentive for successfully reproduced manuscripts. The journals ReScience C and ReScience X also offer open access and fully reproduced research, but they do not publish new research with an internal reproducibility review, instead publishing computational reproductions of studies published elsewhere. We commend ASCE for supporting this initiative and for plans to expand it to other journals in the ASCE portfolio. We encourage other academic journals and supporting organizations to adopt similar practices.

Our goal with this program continues to be to improve reproducibility within the water resources field. We have set and are achieving clear and attainable benchmarks. We are highlighting and rewarding authors that rise to the challenge to make their work reproducible. Papers with reproduced results are patterning best practices and helping shift our science and engineering culture toward reproducibility as the default when conducting analyses and submitting a manuscript.

Data Availability Statement

All data for this editorial are included in a Zenodo repository (Stagge et al. 2023). The code used to generate Fig. 1 is available in a GitHub repository (Stagge et al. 2023).

Reproducible Results

Kyungmin Sung and Irenee Munyejuru (Ohio State University) downloaded the GitHub repository, ran the code, and successfully reproduced Fig. 1 as presented here.

References

Bastidas Pacheco, C. J., J. S. Horsburgh, and N. A. Attallah. 2023. “Variability in consumption and end uses of water for residential users in Logan and Providence, Utah, US.” J. Water Resour. Plann. Manage. 149 (1): 05022014. https://doi.org/10.1061/(ASCE)WR.1943-5452.0001633.

Burgelman, J.-C., C. Pascu, K. Szkuta, R. Von Schomberg, A. Karalopoulos, K. Repanas, and M. Schouppe. 2019. “Open science, open data, and open scholarship: European policies to make science fit for the twenty-first century.” Front. Big Data 2 (Jun): 43. https://doi.org/10.3389/fdata.2019.00043.

Cordeiro, C., A. Borges, and M. R. Ramos. 2022. “A strategy to assess water meter performance.” J. Water Resour. Plann. Manage. 148 (2): 05021027. https://doi.org/10.1061/(ASCE)WR.1943-5452.0001492.

Di Matteo, M., G. C. Dandy, and H. R. Maier. 2017. “Multiobjective optimization of distributed stormwater harvesting systems.” J. Water Resour. Plann. Manage. 143 (6): 04017010. https://doi.org/10.1061/(ASCE)WR.1943-5452.0000756.

European Commission, Directorate-General for Research and Innovation, et al. 2020. Reproducibility of scientific results in the EU: Scoping report. Edited by W. Lusoli. Luxembourg: Publications Office of the European Union. https://doi.org/10.2777/341654.

Goodman, S. N., D. Fanelli, and J. P. A. Ioannidis. 2016. “What does research reproducibility mean?” Sci. Transl. Med. 8 (341): 341ps12. https://doi.org/10.1126/scitranslmed.aaf5027.

Hadjimichael, A., J. Yoon, P. Reed, N. Voisin, and W. Xu. 2023. “Exploring the consistency of water scarcity inferences between large-scale hydrologic and node-based water system model representations of the Upper Colorado River Basin.” J. Water Resour. Plann. Manage. 149 (2): 04022081. https://doi.org/10.1061/JWRMD5.WRENG-5522.

Jander, V., S. Vicuña, O. Melo, and Á. Lorca. 2023. “Adaptation to climate change in basins within the context of the water-energy-food nexus.” J. Water Resour. Plann. Manage. 149 (11): 04023060. https://doi.org/10.1061/JWRMD5.WRENG-5566.

Jaramillo, A., and J. Saldarriaga. 2023. “Fractal analysis of the optimal hydraulic gradient surface in water distribution networks.” J. Water Resour. Plann. Manage. 149 (1): 04022074. https://doi.org/10.1061/(ASCE)WR.1943-5452.0001608.

Kaufman, D., and PAGES 2k Special-Issue Editorial Team. 2017. “Technical note: Open-paleo-data implementation pilot—The PAGES 2k special issue.” Clim. Past 14 (5): 593–600. https://doi.org/10.5194/cp-14-593-2018.

McCabe, M. J., and C. M. Snyder. 2014. “Identifying the effect of open access on citations using a panel of science journals.” Econ. Inq. 52 (4): 1284–1300. https://doi.org/10.1111/ecin.12064.

Morgan, B., and B. Lane. 2022. “Accounting for uncertainty in regional flow–ecology relationships.” J. Water Resour. Plann. Manage. 148 (4): 05022001. https://doi.org/10.1061/(ASCE)WR.1943-5452.0001533.

National Science Foundation and The Institute of Education Sciences, US Department of Education. 2018. Companion guidelines on replication & reproducibility in education research. A supplement to the common guidelines for education research and development, 1–10. Alexandria, VA: National Science Foundation.

Nelson, A. 2022. Memorandum for the heads of executive departments and agencies: Ensuring free, immediate, and equitable access to federally funded research. Washington, DC: Office of Science and Technology Policy. https://doi.org/10.21949/1528361.

Obringer, R., R. Nateghi, Z. Ma, and R. Kumar. 2022. “Improving the interpretation of data-driven water consumption models via the use of social norms.” J. Water Resour. Plann. Manage. 148 (12): 04022065. https://doi.org/10.1061/(ASCE)WR.1943-5452.0001611.

Ottaviani, J. 2016. “The post-embargo open access citation advantage: It exists (probably), it’s modest (usually), and the rich get richer (of course).” PLoS One 11 (8): e0159614. https://doi.org/10.1371/journal.pone.0159614.

Piwowar, H., J. Priem, V. Larivière, J. P. Alperin, L. Matthias, B. Norlander, A. Farley, J. West, and S. Haustein. 2018. “The state of OA: A large-scale analysis of the prevalence and impact of Open Access articles.” PeerJ 6 (Feb): e4375. https://doi.org/10.7717/peerj.4375.

Piwowar, H. A., R. S. Day, and D. B. Fridsma. 2007. “Sharing detailed research data is associated with increased citation rate.” PLoS One 2 (3): e308. https://doi.org/10.1371/journal.pone.0000308.

Rasmussen, D. J., R. E. Kopp, and M. Oppenheimer. 2023. “Coastal defense megaprojects in an era of sea-level rise: Politically feasible strategies or Army Corps fantasies?” J. Water Resour. Plann. Manage. 149 (2): 04022077. https://doi.org/10.1061/(ASCE)WR.1943-5452.0001613.

Rodríguez-Martínez, C., M. Quiñones-Grueiro, and O. Llanes-Santiago. 2023. “Cyberattack diagnosis in water distribution networks combining data-driven and structural analysis methods.” J. Water Resour. Plann. Manage. 149 (5): 04023013. https://doi.org/10.1061/JWRMD5.WRENG-5302.

Rosenberg, D. E., A. S. Jones, Y. Filion, R. Teasley, S. Sandoval-Solis, J. H. Stagge, A. Abdallah, A. Castronova, A. Ostfeld, and D. Watkins Jr. 2021. “Reproducible results policy.” J. Water Resour. Plann. Manage. 147 (2): 01620001. https://doi.org/10.1061/(ASCE)WR.1943-5452.0001368.

Rosenberg, D. E., J. Stagge, A. S. Jones, A. Abdallah, A. M. Castronova, and D. Compton. 2023. “Videos to make results more reproducible: ASCE Journal of Water Resources Planning and Management, HydroShare.” Accessed November 14, 2023. http://www.hydroshare.org/resource/ef64c9f4cd15413e8eca9590ac3bcf85.

Rosenberg, D. E., and D. W. Watkins Jr. 2018. “New policy to specify availability of data, models, and code.” J. Water Resour. Plann. Manage. 144 (9): 01618001. https://doi.org/10.1061/(ASCE)WR.1943-5452.0000998.

Stagge, J. H., D. E. Rosenberg, A. M. Abdallah, H. Akbar, N. A. Attallah, and R. James. 2019. “Assessing data availability and research reproducibility in hydrology and water resources.” Sci. Data 6 (1): 190030. https://doi.org/10.1038/sdata.2019.30.

Stagge, J. H., D. E. Rosenberg, A. M. Abdallah, A. M. Castronova, A. Ostfeld, and A. Spackman Jones. 2023. jstagge/jwrpm_editorial_2023: Version for Zenodo. Genève: Zenodo. https://doi.org/10.5281/ZENODO.10125823.

Thomas, M., and L. Sela. 2023. “MAGNets: Model reduction and aggregation of water networks.” J. Water Resour. Plann. Manage. 149 (2): 06022006. https://doi.org/10.1061/JWRMD5.WRENG-5486.

Tran, V., S. Helmrich, N. W. T. Quinn, and P. A. O’Day. 2023. “Operationalizing real-time monitoring data in simulation models using the public domain HEC-DSSVue software platform.” J. Water Resour. Plann. Manage. 149 (9): 06023004. https://doi.org/10.1061/JWRMD5.WRENG-5728.

Vrachimis, S. G., D. G. Eliades, R. Taormina, Z. Kapelan, A. Ostfeld, S. Liu, M. Kyriakou, P. Pavlou, M. Qiu, and M. M. Polycarpou. 2022. “Battle of the leakage detection and isolation methods.” J. Water Resour. Plann. Manage. 148 (12): 04022068. https://doi.org/10.1061/(ASCE)WR.1943-5452.0001601.

Wang, J., and D. E. Rosenberg. 2023. “Adapting Colorado River Basin depletions to available water to live within our means.” J. Water Resour. Plann. Manage. 149 (7): 04023026. https://doi.org/10.1061/JWRMD5.WRENG-5555.

Information & Authors

Information

Copyright

© 2024 American Society of Civil Engineers.

History

Received: Jan 24, 2024

Accepted: Jan 26, 2024

Published online: May 16, 2024

Published in print: Aug 1, 2024

Discussion open until: Oct 16, 2024
