Step 2: Framework Development Workshops

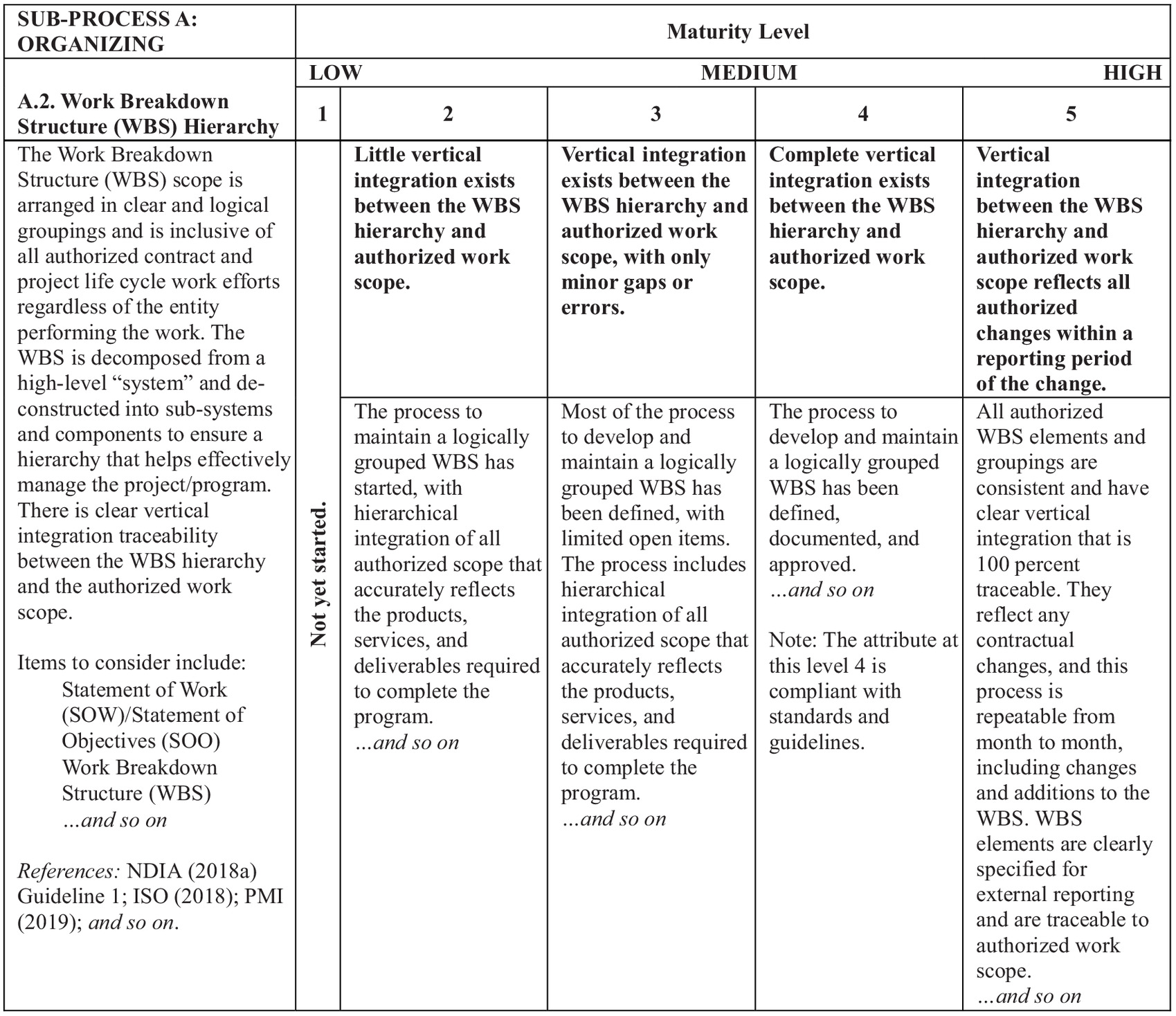

The authors hosted four workshops, in which 56 EVMS practitioners (coincidentally, the same number as the attributes) provided comments on each of the 56 maturity attribute tables (the maturity assessment framework). They also weighted the attributes under each subprocess, and then the ten subprocesses, in order of importance by assigning relative weights. These weights were needed to calculate the normalized weights of the 56 attributes and to complete the scoresheets by assigning a score to each maturity level of each attribute. To bring industry knowledge into the research, the workshops adopted the “research charrette” format from the literature; however, they were held virtually because of the COVID-19 pandemic (Gibson et al. 2022; Gibson and Whittington 2010). A research charrette is a novel and efficient data collection approach proven effective in construction engineering and management research. It allows knowledge sharing through real-time interactions between subject matter experts and academic researchers in a focus group setting. Key benefits include focused discussions, valuable data and insights from industry participants, and faster data collection.

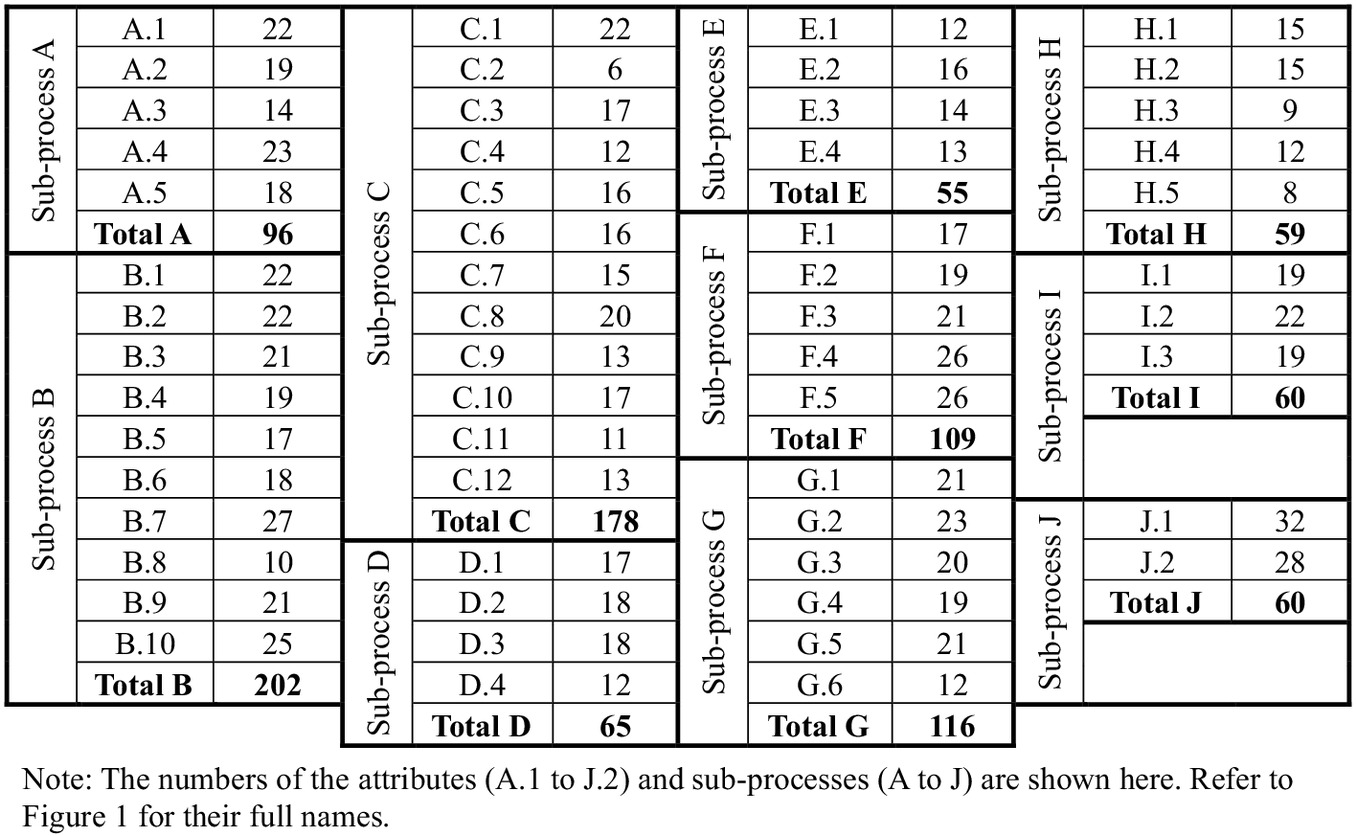

Based on the weights collected from the workshop participants, the maturity attribute level 5 scores were generated giving a maximum score of 1,000. The process for collecting and calculating the maturity level 5 scores based on the collected weights required several steps. First, the participants were asked to provide information about a project from their experience, and use this project as a reference (or anchor) when thinking about prioritizing the attributes and subprocesses. Then, they were asked to allocate 100 points among the x attributes making up each subprocess based upon their perception of the attribute’s relative impact on the subprocess maturity. For example, when weighing the attributes under subprocess A, if attribute A.1 is more important than A.2 to A.5, then they allocated more points to A.1. As an example, they could give A.1 a weight of 40, A.2 a weight of 20, A.3 a weight of 20, A.4 a weight of 10, and A.5 a weight of 10, with all adding to 100. They proceeded to do this for attributes within all the remaining nine subprocesses, always using their anchor project as a basis for their weighting decisions.

The participants were also asked to repeat the same exercise at the subprocess level, allocating 100 points among the ten subprocesses based upon their perception of each subprocess’s relative impact on overall EVMS maturity, again using their anchor project as a personal reference. For example, if in the opinion of the respondent, subprocess A is more important than subprocesses B through J, then the respondent could allocate more points to A. As an example, they could allocate 25 points to subprocess A and distribute the remaining 75 points in lesser amounts among the other nine subprocesses, with all summing to 100.

Using this collective data from the 56 respondents, the authors calculated the attribute average weights and the subprocess average weights. As an example, A.1 (product-oriented work breakdown structure), one of the five attributes making up subprocess A, received an average weight of 22.8 out of 100 points within subprocess A (organizing); subprocess A (organizing), one of the ten subprocesses, received an average weight of 10.9 out of 100.
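The averaging step can be illustrated with a minimal sketch. The first allocation below is the example from the text; the other two respondents and the helper name `average_weights` are hypothetical and only serve to show the mechanics.

```python
from statistics import mean

# Each dict is one respondent's allocation of 100 points within subprocess A.
# The first row is the example from the text; the others are hypothetical.
allocations = [
    {"A.1": 40, "A.2": 20, "A.3": 20, "A.4": 10, "A.5": 10},
    {"A.1": 20, "A.2": 20, "A.3": 20, "A.4": 20, "A.5": 20},
    {"A.1": 25, "A.2": 25, "A.3": 15, "A.4": 20, "A.5": 15},
]


def average_weights(responses):
    """Average each attribute's points across respondents."""
    for response in responses:
        # Every respondent must distribute exactly 100 points.
        if sum(response.values()) != 100:
            raise ValueError("each allocation must sum to 100 points")
    attributes = responses[0].keys()
    return {a: mean(r[a] for r in responses) for a in attributes}


avg = average_weights(allocations)
for attribute, weight in avg.items():
    print(f"{attribute}: {weight:.1f}")
```

Because every individual allocation sums to 100, the averaged weights also sum to 100, so they can be read directly as percentages within the subprocess.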

After calculating the average weights, the authors performed an outlier analysis to check the veracity of respondent input (Aramali et al. 2022a; Kwak and Kim 2017; DeSimone et al. 2015). The authors identified extreme/outlier responses in the collected weights, which are data points lying far from the majority (Kwak and Kim 2017; DeSimone et al. 2015), at the subprocess level and at the attribute level. As a result, responses from five participants were identified as outliers. The remaining 51 data sets were used to calculate the level 5 maturity scores as briefly described in the next paragraph (Aramali et al. 2022a).

After the outlier analysis was completed, several score calculation schemes were tried to develop the final level 5 relative weights for all attributes. The first two schemes were Scheme A and Scheme B. Scheme A was based purely on the weighting responses from the workshops, which had issues such as overinflating some of the individual attribute scores in the smaller subprocesses [Eq. (1)]. Scheme B attempted to address this issue by adding a new multiplier, the attribute distribution factor (%), that took into account the number of attributes that make up each subprocess [Eq. (2)]. This factor was calculated by dividing the number of attributes per subprocess by 56, multiplied by 100. However, a potential issue in Scheme B was that the attribute scores of four subprocesses changed significantly compared to the workshop inputs, with differences greater than 50% compared to Scheme A scores. Therefore, the authors completed 101 different iterations moving between Schemes A and B, changing the share of the Scheme A score in 1% increments. The 52nd scenario was the best fit, with 52% Scheme A score and 48% Scheme B score [Eq. (3)]: the subprocess weights matched the participant results from the workshops, while the attribute score inflation was minimized. More details of this methodology can be found in Aramali et al. (2022a). The corresponding equations are as follows.
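A reconstruction of Eqs. (1) to (3) that is consistent with the description above and that reproduces the worked example that follows is sketched here. The symbols are introduced for illustration and are not necessarily the authors' notation: $w_{ij}$ is the average weight (as a fraction) of attribute $j$ within subprocess $i$, $W_i$ is the average weight (as a fraction) of subprocess $i$, $n_i$ is the number of attributes in subprocess $i$, and $D_i = n_i/56$ is the attribute distribution factor expressed as a fraction.

$$
\begin{aligned}
S^{A}_{ij} &= w_{ij}\, W_i \times 1{,}000 && (1)\\[4pt]
S^{B}_{ij} &= \frac{w_{ij}\, W_i\, D_i}{\sum_{k}\sum_{l} w_{kl}\, W_k\, D_k} \times 1{,}000 && (2)\\[4pt]
S_{ij} &= 0.52\, S^{A}_{ij} + 0.48\, S^{B}_{ij} && (3)
\end{aligned}
$$

The double sum in the denominator of Eq. (2) runs over all 56 attributes, so the Scheme B scores, like the Scheme A scores, total 1,000.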

As an example, based on the workshop inputs with outliers removed, attribute A.1 had an average weight of 22.6% within subprocess A. Subprocess A had an average weight of 10.5% across all ten subprocesses. Therefore, attribute A.1’s normalized weighted score under Scheme A is 23.73, rounded to 24, out of 1,000 [result of Eq. (1): $0.226 \times 0.105 \times 1{,}000 = 23.73$]. Then, since subprocess A has five attributes, the attribute distribution factor is 8.93% (result of $5/56 \times 100$). In the same example of attribute A.1, the numerator of Eq. (2) is $0.226 \times 0.105 \times 0.0893 \approx 0.002119$, whereas the denominator is the sum of this same product across all 56 attributes, 0.11048 (the step that normalizes the scores across all 56 attributes). Therefore, the normalized weighted score in Scheme B for attribute A.1 is $(0.002119 / 0.11048) \times 1{,}000 \approx 19.18$, rounded to 19. The final attribute level 5 score represents 52% of the Scheme A score and 48% of the Scheme B score [Eq. (3)]. In this case, attribute A.1 has a final level 5 maturity score of 21.55, rounded to 22, out of 1,000 (calculated as $0.52 \times 23.73 + 0.48 \times 19.18 = 21.55$). These steps were applied to all the attributes, and the resulting scores are shown in the results section (specifically in Fig. 3).
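The A.1 example can be checked with a short sketch. The formulas are a reading of the Scheme A, Scheme B, and blended calculations as described in the text; the function and variable names are hypothetical, and the denominator 0.11048 is taken from the text rather than recomputed from all 56 attributes.

```python
N_ATTRIBUTES = 56   # total attributes in the framework
MAX_SCORE = 1000    # maximum overall level 5 score

# Figures quoted in the text for attribute A.1 (outliers removed).
attr_weight = 0.226        # A.1 average weight within subprocess A
subprocess_weight = 0.105  # subprocess A average weight
n_attrs_in_subprocess = 5  # attributes making up subprocess A
denominator = 0.11048      # normalizing sum over all 56 attributes (from the text)


def scheme_a(attr_w, sub_w):
    """Scheme A: product of the two workshop weights, scaled to 1,000."""
    return attr_w * sub_w * MAX_SCORE


def distribution_factor(n_attrs):
    """Attribute distribution factor as a fraction (8.93% for five attributes)."""
    return n_attrs / N_ATTRIBUTES


def scheme_b(attr_w, sub_w, n_attrs, denom):
    """Scheme B: Scheme A product times the distribution factor, renormalized."""
    return attr_w * sub_w * distribution_factor(n_attrs) / denom * MAX_SCORE


def blended(score_a, score_b, share_a=0.52):
    """Final level 5 score: 52% Scheme A plus 48% Scheme B."""
    return share_a * score_a + (1 - share_a) * score_b


a = scheme_a(attr_weight, subprocess_weight)
b = scheme_b(attr_weight, subprocess_weight, n_attrs_in_subprocess, denominator)
final = blended(a, b)

print(f"Scheme A: {a:.1f} (rounded {round(a)})")
print(f"Scheme B: {b:.1f} (rounded {round(b)})")
print(f"Final:    {final:.1f} (rounded {round(final)})")
```

The rounded results agree with the text (24, 19, and 22). The second decimal of the unrounded values can differ slightly from the text, since the text rounds intermediate values such as the 8.93% factor.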

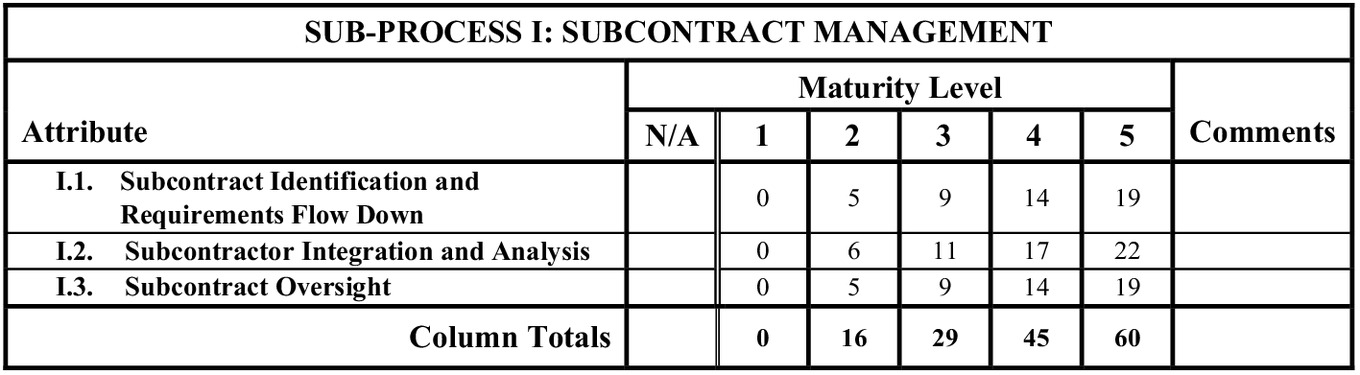

Linear interpolation was applied to generate the scores of maturity levels 2, 3, and 4 for each attribute. The authors interpolated between the score of 0 for level 1 and the level 5 scores calculated earlier (Aramali et al. 2022a). The scores were then entered into the scoresheets, which are used to assess each attribute when applied to a project or program. Also, since the application of EVMS is tailorable and dependent on project characteristics, the scoresheet allowed the option to specify attributes that are not applicable to the project being evaluated (Van Der Steege 2019; Bergerud 2017).
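As a minimal sketch of the interpolation, the following assumes five equally spaced maturity levels, with level 1 scoring 0 and level 5 scoring the attribute's level 5 score. The function name is hypothetical, and whether the authors rounded the intermediate scores is not stated in the text.

```python
def level_scores(level5_score, n_levels=5):
    """Linearly interpolate from 0 at level 1 to level5_score at the top level."""
    step = level5_score / (n_levels - 1)
    return {level: step * (level - 1) for level in range(1, n_levels + 1)}


# Attribute A.1 with its rounded level 5 score of 22 from the worked example.
scores_a1 = level_scores(22)
for level, score in scores_a1.items():
    print(f"level {level}: {score}")
```

Under this assumption, each step up in maturity level adds one quarter of the attribute's level 5 score.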

As previously mentioned, 56 individual practitioners participated in the framework development workshops. These individuals contributed to developing the framework but had not yet tested it. They collectively represented 32 unique organizations and had an average of 19 years of industry experience. Other than having more than 10 years of significant EVM and integrated project management experience and working for clients and contractors, there were no specific criteria or selection process for individuals to participate in the workshops. The workshops were open to industry practitioners involved in project management and specifically EVMS implementation; invitations were sent to leading organizations on the topic with the help of the research team; and participation was voluntary. The largest share of participants represented government contractor organizations (41%), followed by government organizations (34%) and consultants (16%); the remainder represented software developers and manufacturers (9%). Hence, a variety of perspectives were considered when establishing the framework. In terms of employment role, roughly 36% were in project controls management roles, 21% in project management, 16% in compliance management, and 14% in consulting, and the remaining 13% were mostly executives. Overall, the framework development input came from a diverse pool of individuals with a variety of expertise. In total, the workshop participants provided 859 comments, which were resolved by the RT to produce the final version of the maturity assessment framework.

Step 3: Framework Testing and Data Collection

The individuals who participated in the earlier workshops, as well as additional experts suggested by the RT, were invited to four additional workshops to voluntarily test the framework on their completed projects. During these workshops, each participant was asked to select a completed reference project that they had been involved with in some capacity; the data were collected via a live Qualtrics survey as the workshop progressed. Then, the respondents were asked to provide background and completion data indicating project performance. Next, participants were asked to review the attribute tables with maturity level descriptions and rate the maturity level of the 56 attributes as applied on their projects at 20 percent project completion. This point in the project lifecycle was selected based on RT input and the literature, since EVMS is established or relatively mature at this point, as described by Aramali et al. (2022b). Each selected maturity level maps to a corresponding score. The sum of the scores across all attributes represents the overall EVMS maturity score for a given project (with a higher score meaning higher maturity). At the end of the workshop, the Qualtrics survey automatically generated a total EVMS maturity score for each project (a raw score between 1 and 1,000 points). Overall, data from 35 completed projects provided by 31 workshop participants were collected and analyzed.
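The scoring of one project can be sketched as follows. Only the A.1 level 5 score of 22 comes from the text; the other level 5 scores, the ratings, and the function names are hypothetical. Skipping attributes marked not applicable (`None`) is an assumption, since the text does not say how such attributes enter the total.

```python
def attribute_score(level5_score, level, n_levels=5):
    """Score for a selected maturity level, interpolated from 0 at level 1."""
    return level5_score * (level - 1) / (n_levels - 1)


def maturity_score(level5_scores, ratings):
    """Sum attribute scores; attributes rated None (not applicable) are skipped."""
    total = 0.0
    for attribute, level in ratings.items():
        if level is None:  # assumption: not-applicable attributes add nothing
            continue
        total += attribute_score(level5_scores[attribute], level)
    return total


# Three attributes only, for illustration; a full assessment covers all 56.
level5_scores = {"A.1": 22, "A.2": 20, "B.1": 16}  # A.2 and B.1 are hypothetical
ratings = {"A.1": 3, "A.2": 5, "B.1": 1}           # hypothetical participant ratings

total = maturity_score(level5_scores, ratings)
print(f"EVMS maturity score (partial example): {total}")
```

In this illustration, A.1 at level 3 contributes half of its level 5 score, A.2 at level 5 contributes its full score, and B.1 at level 1 contributes nothing.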

Based on the research procedures of Step 2 and Step 3, a total of eight workshops contributed to the development and testing of the novel EVMS maturity framework (four framework-development workshops plus four framework-testing workshops). The adopted workshop setting united subject matter experts with the researchers and the research sponsor and fostered interactive dialogue between them. This dialogue was helpful for various reasons. First, the researchers guided the participants and trained them specifically in using the framework step-by-step to provide the necessary numerical data or comments aligned with the research needs. For example, participants were familiarized with the framework before applying it to their anchor projects and programs. Second, the authors were available to clarify questions as the data collection proceeded. Third, with directly provided guidance from the researchers to the participants and real-time clarifications, bias in data inputs was minimized and consistency maintained across the workshops. Finally, and based on the statistical data that will be presented next, the authors and RT view the collected sample as sufficient to make statistically valid claims; however, as always and as in any sample of data, caution should be used in the generalization of the results to every single project outside of the sample.

Step 4: Performance Data Analysis

After the framework testing data collection, general descriptive statistics and statistical analyses were calculated using Microsoft Excel and the IBM SPSS software. In total, 10 project performance metrics were considered in the scope of the study. The RT judged these 10 measures to be relevant to and associated with EVMS, based on experiential industry evidence and their criticality for project success (other performance measures can be examined by applying the same methodology). They are divided into continuous and discrete variables for the purpose of applying appropriate statistical tests. The discrete variables included: (1) meeting business objectives, (2) customer satisfaction, and (3) proactive management using EVMS (these three were evaluated on a 1-5 scale, ranging from 1, “very unsuccessful,” to 5, “very successful,” assessed retrospectively after project completion), and (4) compliance with EVMS guidelines (e.g., NDIA 2018a): a simple yes or no answer tied to 20 percent project completion. In addition to the EVMS maturity score, the continuous variables evaluated included: (1) final cost performance index (CPI), (2) cost growth, (3) cost growth excluding change orders, (4) schedule growth, (5) change absolute value (all applied to the completed project), and (6) contingency applied to the project at 20 percent completion. The last five were calculated using the following equations, where PMB is the performance measurement baseline as it existed at 20 percent completion for the project.

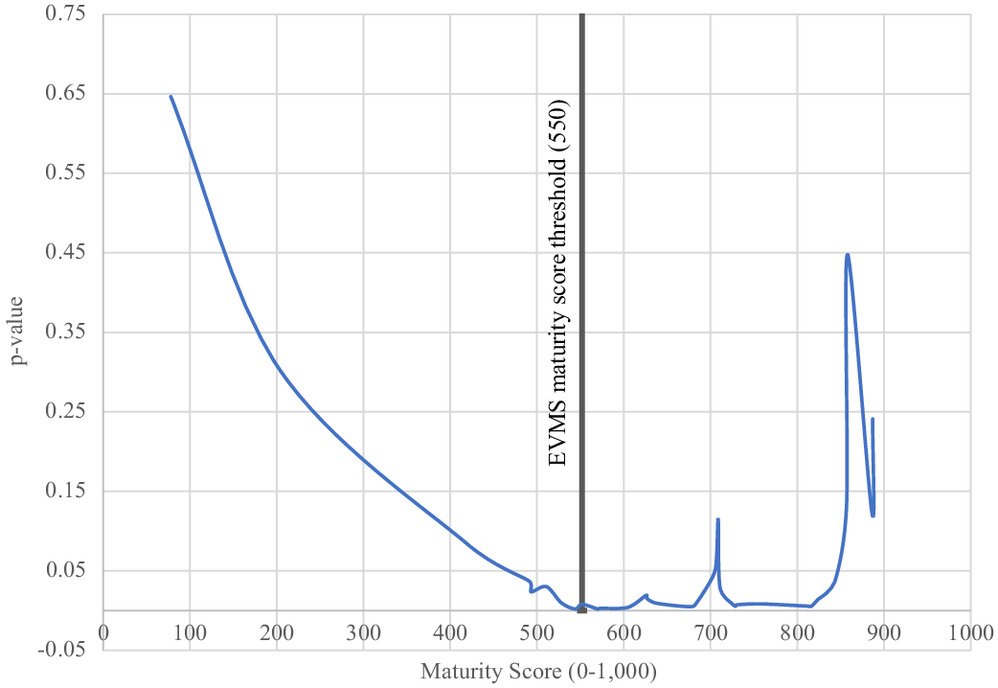

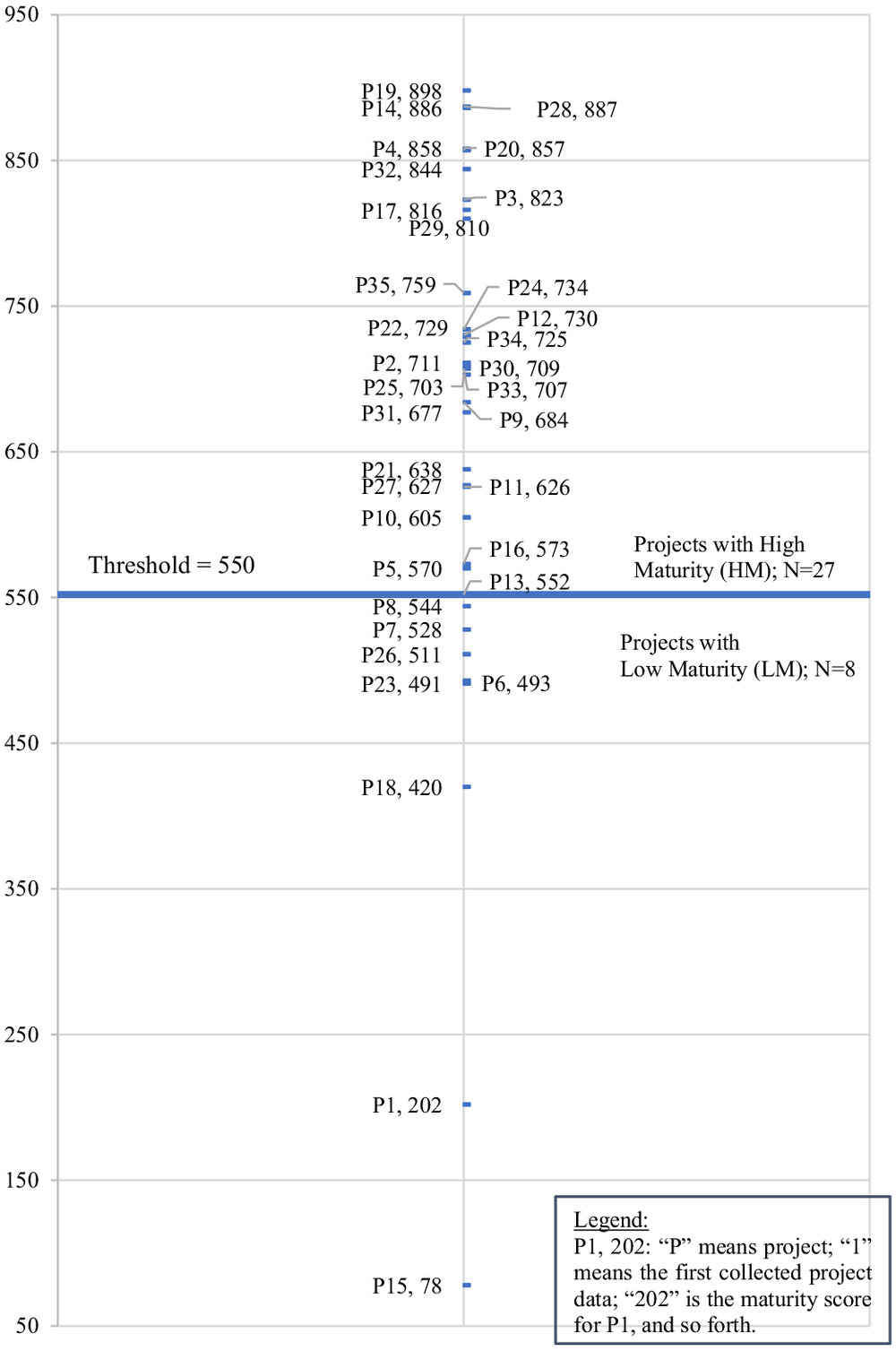

To identify the impact of EVMS maturity on project performance, a multi-series analysis was performed. First, a step-wise analysis (Yussef et al. 2019; El Asmar et al. 2018) split the projects into two subgroups: projects with high EVMS maturity (HM) and projects with low EVMS maturity (LM) (for brevity, the authors use the term HM projects to refer to the projects with EVMS subprocesses that are mature and LM projects to those with subprocesses that have low maturity). The purpose of classifying projects into these two subsets was to study the differences between them in terms of project performance metrics. Second, the authors checked for normality of the data distribution in each subgroup, and accordingly, a valid statistical test was chosen to check for significant differences. When the normality test failed, the nonparametric Mann-Whitney U-test was conducted (Corder and Foreman 2014). When the normality test passed, an independent t-test was used to compare the two subgroups (Corder and Foreman 2014; McCrum-Gardner 2008). In both cases, the null hypothesis is that no difference exists between HM and LM projects for each variable. For the discrete variables, Mann-Whitney U-tests were conducted between LM and HM projects. Third, the authors checked for correlation; the null hypotheses were that a relationship does not exist between the maturity score and each of the continuous variables. All of the results of the data analyses are given in the following sections.
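The nonparametric branch of this procedure can be illustrated with a minimal, standard-library sketch of a two-sided Mann-Whitney U-test. It is not the authors' SPSS procedure: it uses the normal approximation without tie or continuity correction, which is rough for small samples. The CPI values and the function name are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U-test via the normal approximation.

    Simplified sketch: no tie correction and no continuity correction.
    Returns the smaller U statistic and an approximate p-value.
    """
    n1, n2 = len(x), len(y)
    # U for x: count pairs where x exceeds y, with ties counted as one half.
    u1 = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in x for b in y)
    u = min(u1, n1 * n2 - u1)
    mean_u = n1 * n2 / 2
    sd_u = sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u            # z <= 0 because u is the smaller U
    p_value = 2 * NormalDist().cdf(z)  # two-sided p-value
    return u, p_value


# Hypothetical final CPI values for the two subgroups.
hm_cpi = [1.02, 0.99, 1.05, 1.00, 0.98, 1.03]
lm_cpi = [0.85, 0.92, 0.97, 0.88, 0.95, 0.90]

u_stat, p = mann_whitney_u(hm_cpi, lm_cpi)
print(f"U = {u_stat}, p = {p:.4f}")
if p < 0.05:
    print("Reject the null hypothesis of no difference between HM and LM projects.")
```

A statistics package would typically report an exact p-value for samples this small, so the approximate p-value here is indicative only.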